Here at IT Labs, we love Kubernetes. It’s a platform that allows you to run and orchestrate container workloads; at the very least, that is what everyone expects from it. The official Kubernetes website defines it as follows:

Kubernetes (K8s) is an open-source system for automating deployment, scaling, and management of containerized applications.

So, it adds automated deployment, scaling, and management into the mix as well. Scrolling further down the official website, we also find features like automated rollouts and rollbacks, load balancing, and self-healing, but the one that stands out is “Run Anywhere”:

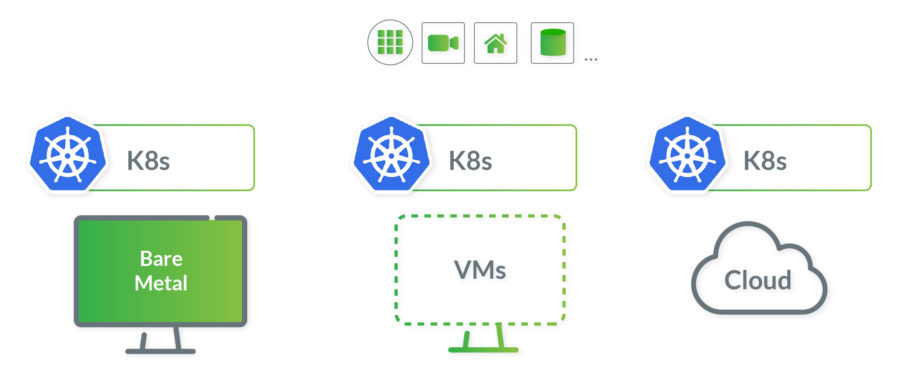

Kubernetes is open source giving you the freedom to take advantage of on-premises, hybrid, or public cloud infrastructure, letting you effortlessly move workloads to where it matters to you.

Now, what does this mean? How is this going to help your business? I believe the key part is “letting you effortlessly move workloads to where it matters to you.” Businesses, empowered by Kubernetes, no longer have to compromise on their infrastructure choices.

Kubernetes as a Container Orchestration Platform

A bit of context

When it comes to infrastructure, the clear winners of the past were always the ones that managed to abstract the infrastructure resources (the hardware) as much as possible at that point in time.

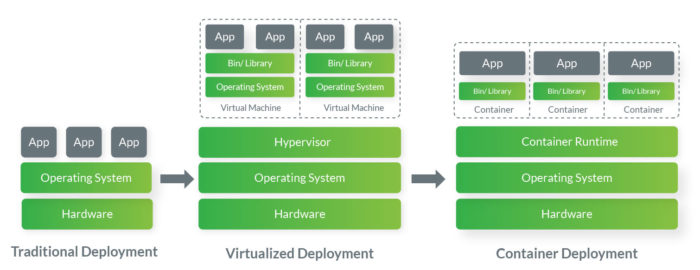

Each decade has introduced a new infrastructure model that revolutionized the way businesses deploy their applications. Back in the 90s, computing resources were the actual physical servers in the backroom or rented from a hosting company. In the 2000s, virtualization was introduced, and Virtual Machines (VMs) abstracted the physical hardware by turning one server into many servers.

In the 2010s, containers took the stage and went even further, abstracting at the application layer by packaging code and its dependencies together. Multiple containers can run on the same machine and share the operating system (OS) kernel, each running as an isolated process. In 2013, the open-source Docker Engine popularized containers and made them the underlying building blocks of modern application architectures.

Containers became widely accepted as the preferred deployment method, and the major industry players adopted them very quickly, even before they became the market norm. Because their workloads are huge, these companies had to find ways to manage very large numbers of containers.

At Google, engineers had built internal container orchestration systems called Borg and Omega, which they used to deploy and run services such as their search engine. Building on the lessons learned and best practices from Borg and Omega, Google created Kubernetes, open-sourced it in 2014, and in 2015 donated it to the newly formed Cloud Native Computing Foundation (CNCF).

In the 2010s, the cloud revolution was also under way, with business adoption of the cloud growing rapidly. It allowed for even greater infrastructure abstraction through managed services and Platform-as-a-Service (PaaS) offerings, which took care of the infrastructure and let businesses focus on adding value to their products.

The cloud transformation of businesses will continue in the 2020s. It has become evident that the journey differs depending on the business use case, with each company having its own unique set of goals and timelines. What we can foresee is that containers will remain the building blocks of modern applications, with Kubernetes as the leading container orchestration platform, keeping its place as a necessity.

What is Kubernetes

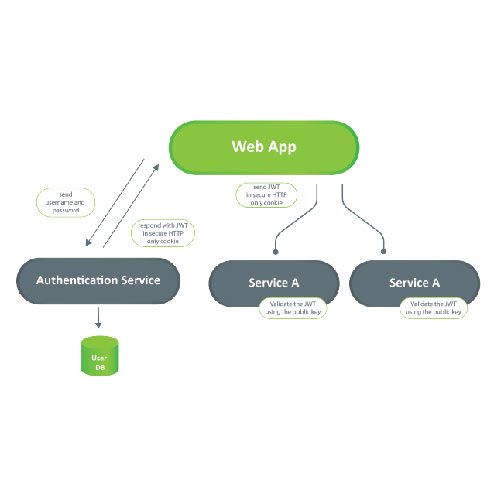

Kubernetes makes it easy to deploy and manage applications built with a microservice architecture. It provides a framework for running distributed systems resiliently and with great predictability.

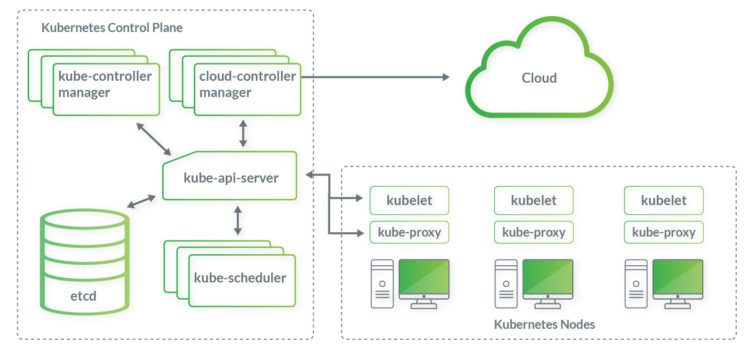

The central component of Kubernetes is the cluster, which consists of a control plane and a set of worker machines called nodes (physical servers or VMs). The control plane’s components, such as kube-scheduler, make global decisions about the cluster (for example, which node a new pod should run on) and detect and respond to cluster events (for example, a pod failing and needing to be replaced). The nodes run the actual application workload: the containers.
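
That respond-to-events behavior is usually described as a reconciliation (control) loop: observe the actual state, compare it with the desired state, and act on the difference. The Python sketch below is a toy model under heavy simplifying assumptions: the "cluster" is an in-memory object whose only state is a list of pod names, and `ToyCluster` and `reconcile` are hypothetical names, not part of any Kubernetes client library. Real controllers watch the API server and handle far more cases.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for cluster state (not a real Kubernetes API)."""
    desired_replicas: int
    running_pods: list[str] = field(default_factory=list)
    _ids: count = field(default_factory=count, repr=False)

    def start_pod(self) -> str:
        name = f"web-{next(self._ids)}"
        self.running_pods.append(name)
        return name

    def stop_pod(self, name: str) -> None:
        self.running_pods.remove(name)


def reconcile(cluster: ToyCluster) -> list[str]:
    """One pass of a control loop: compare desired vs. actual state and act on the gap."""
    actions = []
    diff = cluster.desired_replicas - len(cluster.running_pods)
    for _ in range(diff):  # too few pods: start new ones
        actions.append(f"start {cluster.start_pod()}")
    for _ in range(-diff):  # too many pods: stop the newest ones
        victim = cluster.running_pods[-1]
        cluster.stop_pod(victim)
        actions.append(f"stop {victim}")
    return actions


if __name__ == "__main__":
    cluster = ToyCluster(desired_replicas=3)
    print(reconcile(cluster))  # ['start web-0', 'start web-1', 'start web-2']
    cluster.stop_pod("web-1")  # simulate a failed pod
    print(reconcile(cluster))  # ['start web-3']
    print(reconcile(cluster))  # [] (already converged)
```

Stopping `web-1` plays the role of a failed pod; the next pass notices the gap and starts a replacement, which is roughly the job the ReplicaSet controller does for real pods.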

There are many terms and objects in the Kubernetes world; these are some worth being familiar with:

- Pod

The smallest deployable unit of computing in Kubernetes: a group of one or more containers with shared storage and network resources, plus a specification for how to run the container(s)

- Service

An abstraction for exposing an application running on a set of pods as a network service

- Ingress

An API object that manages external access to the services in a cluster, typically over HTTP/HTTPS. It may also provide load balancing, SSL termination, and name-based virtual hosting

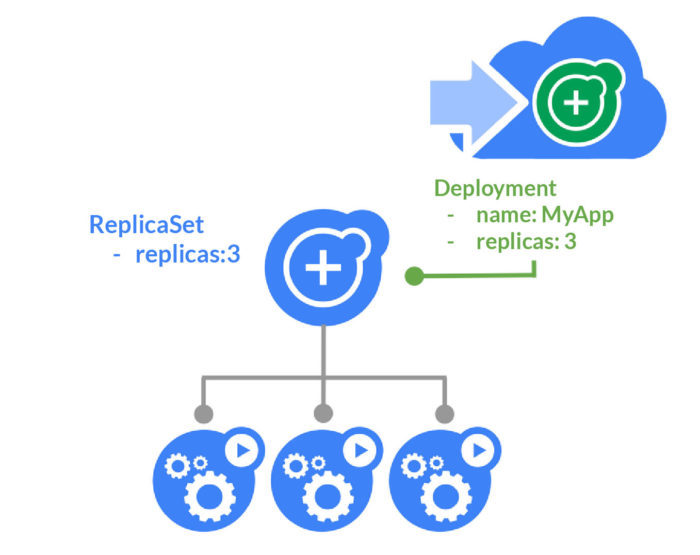

- ReplicaSet

Guarantees the availability of a specified number of identical pods by maintaining a stable set of replica pods running at any given time

- Deployment

A declarative way of describing the desired state of pods and ReplicaSets; it tells Kubernetes how to create and update container instances
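
To make these objects more concrete, here is a minimal sketch of a Deployment (which creates and manages a ReplicaSet) and a Service in front of its pods. Kubernetes accepts JSON as well as YAML manifests, so they are built here as plain Python dictionaries and printed as a `List`; the name `web`, the image `nginx:1.25`, the replica count, and the ports are illustrative assumptions.

```python
import json


def deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Deployment: the desired state is `replicas` identical pods running `image`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # the ReplicaSet behind this Deployment keeps this many pods
            "selector": {"matchLabels": labels},  # which pods belong to this Deployment
            "template": {  # pod template: one container per pod here
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def service(name: str, port: int, target_port: int) -> dict:
    """Service: a stable endpoint that load balances across pods matching the selector."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [deployment("web", "nginx:1.25", replicas=3, port=80), service("web", 80, 80)],
    }
    print(json.dumps(manifests, indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`. The key design point is the label: the Deployment’s selector and pod template share `app: web`, and the Service routes traffic to whichever pods carry that label.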

Additionally, Kubernetes (as a container orchestration platform) offers:

- Service Discovery and Load Balancing

Exposes containers through a DNS name or their own IP address and automatically load balances requests across the container instances

- Storage Orchestration

Automatically mount a storage system of your choice, whether it’s local storage or storage from a cloud provider

- Control over resource consumption

Configure how much node CPU and memory each container instance needs

- Self-healing

Automatically restarts containers that fail, replaces containers when needed, and kills containers that don’t respond to a configured health check. Kubernetes also supports readiness checks, so containers are not advertised to clients until they are ready to serve requests

- Automated rollout and rollback

Using Deployments, Kubernetes moves the actual state toward the desired state at a controlled rate: it creates new containers, removes existing ones, and allocates resources to the new containers. If a rollout goes wrong, it can be rolled back to a previous revision

- Secret and configuration management

Application configuration and sensitive information can be stored separately from the container image, so they can be updated without rebuilding the image and without exposing sensitive values

- Declarative infrastructure management

YAML (or JSON) configuration files can be used to declare and manage the infrastructure

- Extensibility

The Kubernetes API can be extended through custom resources.

- Monitoring

Prometheus, an open-source CNCF project, is a popular tool for monitoring Kubernetes, providing powerful metrics, insights, and alerting

- Packaging

Helm, the Kubernetes package manager, makes it easy to install and manage Kubernetes applications. Packages (called charts) are available for many use cases; one example is the Azure Key Vault provider for the Secrets Store CSI Driver, which mounts Azure Key Vault secrets into Kubernetes pods

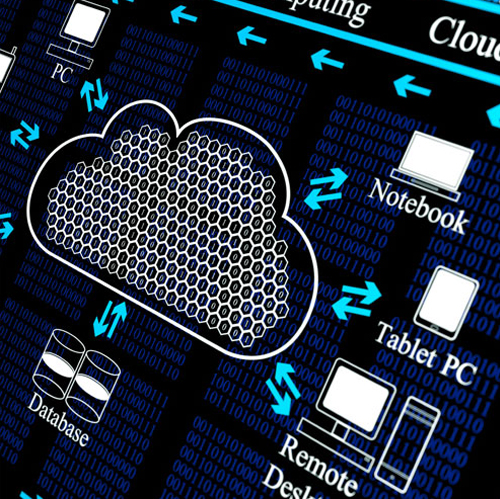

- Managed Kubernetes services from cloud providers

Because Kubernetes is the de facto standard for container orchestration, all major cloud providers offer Kubernetes-as-a-service. Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS), Google Kubernetes Engine (GKE), IBM Cloud Kubernetes Service, and Red Hat OpenShift all manage the control plane automatically, letting businesses focus on adding value to their products.
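
Several of the features listed above are configured in a container’s specification. The sketch below, again building the manifest as a Python dictionary, shows resource requests and limits, a liveness probe (restart on failure), a readiness probe (no traffic until ready), and configuration and secrets injected from a ConfigMap and a Secret. The image, the probe paths `/healthz` and `/ready`, port 8080, and the `web-config`/`web-secrets` object names are hypothetical, and the helper `cpu_millicores` handles only plain and `m`-suffixed CPU quantities.

```python
import json


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '250m', '0.5' or '2' to millicores (other formats unsupported)."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def web_container(image: str, cpu_request: str = "250m", cpu_limit: str = "500m") -> dict:
    """Container spec for a hypothetical web app; belongs in a pod template's `containers` list."""
    if cpu_millicores(cpu_request) > cpu_millicores(cpu_limit):
        raise ValueError("CPU request must not exceed the CPU limit")
    return {
        "name": "web",
        "image": image,
        "ports": [{"containerPort": 8080}],
        # Control over resource consumption: the scheduler places the pod based on the
        # requests; the limits cap what the container may use while it runs.
        "resources": {
            "requests": {"cpu": cpu_request, "memory": "128Mi"},
            "limits": {"cpu": cpu_limit, "memory": "256Mi"},
        },
        # Self-healing: the kubelet restarts the container if this check keeps failing.
        "livenessProbe": {"httpGet": {"path": "/healthz", "port": 8080}, "periodSeconds": 10},
        # Readiness: the pod receives Service traffic only while this check succeeds.
        "readinessProbe": {"httpGet": {"path": "/ready", "port": 8080}, "periodSeconds": 5},
        # Configuration and secrets come from cluster objects, not from the image.
        "envFrom": [{"configMapRef": {"name": "web-config"}}],
        "env": [{
            "name": "DB_PASSWORD",
            "valueFrom": {"secretKeyRef": {"name": "web-secrets", "key": "db-password"}},
        }],
    }


if __name__ == "__main__":
    print(json.dumps(web_container("example.com/web:1.0"), indent=2))
```

Kubernetes itself rejects a container whose request exceeds its limit; the local check only makes that mistake fail earlier.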

Kubernetes as a Progressive Delivery Enabler

Any business that has already adopted agile development, the Scrum methodology, and Continuous Integration/Continuous Delivery (CI/CD) pipelines needs to start thinking about the next step. In modern software development, that next step is called “Progressive Delivery”: essentially a modified version of Continuous Delivery with a more gradual process for rolling out application updates, using techniques like blue/green and canary deployments, A/B testing, and feature flags.

Blue/Green Deployment

One way of achieving a zero-downtime upgrade of an existing application is a blue/green deployment. “Blue” is the running copy of the application, while “Green” is the new version. For a period, both run at the same time, and user traffic is then switched over to the new “Green” version seamlessly.

In Kubernetes, there are multiple ways to achieve blue/green deployments. One is with Deployments and ReplicaSets. In a nutshell, the new “Green” deployment is applied to the cluster, so two versions (two sets of containers) run at the same time. A health check is performed on the “Green” deployment’s container replicas. If the health check passes, the load balancer is updated to point to the “Green” replicas, and the “Blue” replicas are removed. If it fails, the “Green” replicas are stopped and an alert is sent to DevOps, while the current “Blue” version continues to run and serve end-user requests.
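
One common way to implement the switch described above, assuming both Deployments label their pods with `app: web` plus a `version` label of `blue` or `green`, is to point a single Service’s selector at one color and flip it once the new replicas pass their health checks. The sketch below models only that decision in plain Python; the health-check results are passed in rather than fetched from a cluster, and `cut_over`, `CutOverResult`, and the label values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CutOverResult:
    service: dict          # the Service manifest after the decision
    switched: bool         # True if traffic now goes to the new color
    alert: Optional[str]   # message for DevOps when the new color is rejected


def cut_over(service: dict, new_color: str, health_checks: list[bool]) -> CutOverResult:
    """Point the Service at `new_color` only if every new replica passed its health check.

    An empty list of checks counts as "no evidence" and is rejected.
    The input manifest is never mutated; a modified copy is returned on success.
    """
    spec = service["spec"]
    current = spec["selector"]["version"]
    if health_checks and all(health_checks):
        new_selector = {**spec["selector"], "version": new_color}
        updated = {**service, "spec": {**spec, "selector": new_selector}}
        return CutOverResult(updated, True, None)
    failed = health_checks.count(False)
    alert = (f"{new_color} rejected: {failed}/{len(health_checks)} replicas unhealthy; "
             f"{current} keeps serving traffic")
    return CutOverResult(service, False, alert)


if __name__ == "__main__":
    svc = {"apiVersion": "v1", "kind": "Service", "metadata": {"name": "web"},
           "spec": {"selector": {"app": "web", "version": "blue"},
                    "ports": [{"port": 80, "targetPort": 8080}]}}
    print(cut_over(svc, "green", [True, True, True]).service["spec"]["selector"])
    # {'app': 'web', 'version': 'green'}
    print(cut_over(svc, "green", [True, False, True]).alert)
    # green rejected: 1/3 replicas unhealthy; blue keeps serving traffic
```

Because the Service is only re-pointed after every check passes, a failed rollout leaves the “Blue” selector untouched; scaling down or deleting the “Blue” Deployment after a successful cut-over would be a separate step.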

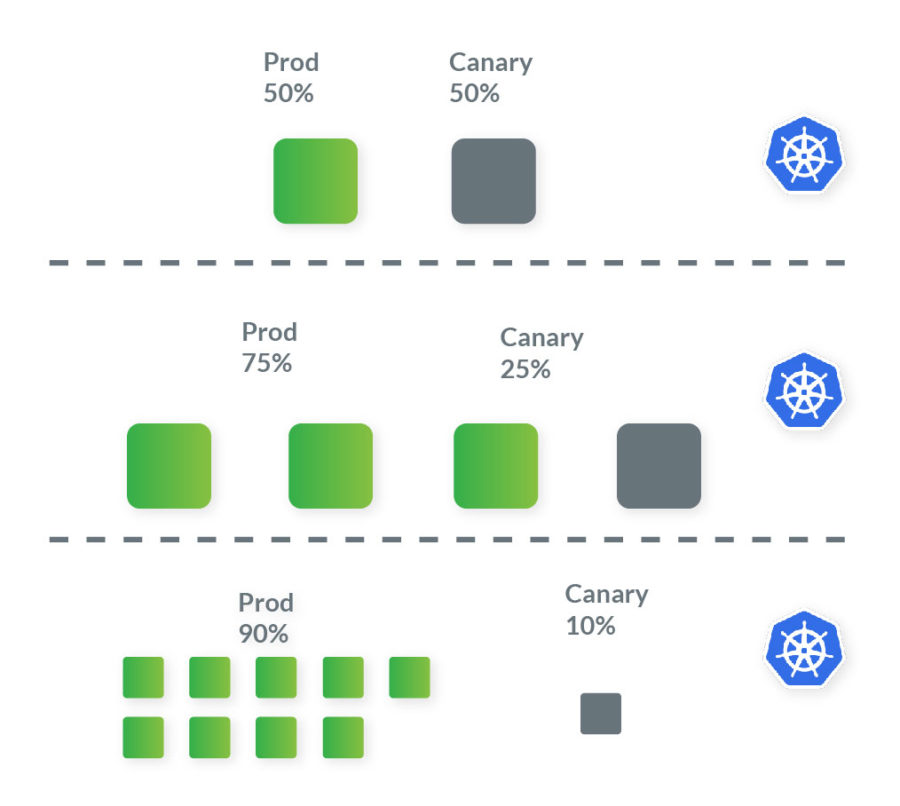

Canary Deployments

The canary deployment strategy is more advanced and involves incremental rollouts of the application. The new version is gradually deployed to the Kubernetes cluster while receiving a small amount of live traffic: a small subset of user requests (the “canary”) is redirected to the new version, while the current version continues to serve the rest. This allows potential issues with the new version to be detected early. If everything runs smoothly, confidence in the new version grows and a larger share of requests is shifted to it, until it serves all of them. At that point, the new version is promoted to “current version.” QA can also perform additional verification, such as smoke tests, testing new features through feature flags, and collecting user feedback through A/B testing.
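
The gradual promotion described above is essentially a loop: shift a little more traffic to the canary, check a health metric, then either continue or roll back. Progressive delivery tools automate a loop of roughly this shape against live metrics. The minimal sketch below assumes the canary’s error rate at each step is already known (passed in as a list); `canary_rollout` and its step size, maximum weight, and error threshold are illustrative names and values, not the settings of any particular tool.

```python
def canary_rollout(error_rates: list[float], step: int = 10, max_weight: int = 50,
                   threshold: float = 0.01) -> tuple[str, list[int]]:
    """Simulate a stepwise canary analysis.

    error_rates[i] is the canary's error rate measured while it receives the i-th traffic
    weight. Returns the outcome and the weights (percent of traffic) that were tried.
    """
    weights: list[int] = []
    weight = 0
    for rate in error_rates:
        weight = min(weight + step, max_weight)  # shift a bit more traffic to the canary
        weights.append(weight)
        if rate > threshold:
            return "rolled back", weights  # send all traffic back to the current version
        if weight == max_weight:
            return "promoted", weights  # checks passed at max weight: canary becomes current
    return "halted", weights  # ran out of measurements before reaching max_weight


if __name__ == "__main__":
    print(canary_rollout([0.0, 0.004, 0.003, 0.002, 0.001]))  # ('promoted', [10, 20, 30, 40, 50])
    print(canary_rollout([0.0, 0.002, 0.05]))                 # ('rolled back', [10, 20, 30])
```

Capping the canary at a maximum weight before promotion limits how many users a bad release can reach, while the error threshold decides how strict the gate is.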

There are multiple ways to do canary deployments with Kubernetes. One is to use an Ingress to split traffic between the container replicas of two running deployments (the current version and the canary). Another is to use a progressive delivery tool like Flagger.
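
With the ingress-nginx controller specifically (an assumption; other Ingress controllers use different mechanisms), the Ingress-based split can be expressed as a second “canary” Ingress for the same host, created alongside the main Ingress and carrying the `nginx.ingress.kubernetes.io/canary` and `nginx.ingress.kubernetes.io/canary-weight` annotations. The sketch builds that manifest as a Python dictionary; the host, the Service names `web-v1`/`web-v2`, and the `nginx` ingress class name are hypothetical.

```python
import json


def canary_ingress(host: str, service: str, port: int, weight: int) -> dict:
    """Canary Ingress for ingress-nginx: sends roughly `weight`% of requests for `host` to `service`."""
    if not 0 <= weight <= 100:
        raise ValueError("weight must be a percentage between 0 and 100")
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": f"{service}-canary",
            "annotations": {
                "nginx.ingress.kubernetes.io/canary": "true",
                "nginx.ingress.kubernetes.io/canary-weight": str(weight),  # annotation values are strings
            },
        },
        "spec": {
            "ingressClassName": "nginx",
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    # The main Ingress for shop.example.com (not shown) keeps routing to "web-v1".
    print(json.dumps(canary_ingress("shop.example.com", "web-v2", 80, weight=10), indent=2))
```

Raising the weight in steps and finally removing the canary Ingress once the new version is promoted gives a manual version of the rollout that a tool like Flagger automates.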

Kubernetes as an Infrastructure Abstraction Layer

As more businesses adopt the cloud, cloud providers are maturing and competing with their managed services and offerings. Businesses want to optimize their return on investment (ROI), use the best offering from each cloud provider, and preserve autonomy by reducing cloud vendor lock-in. Some are required to use a combination of on-premises or private clouds because of governance rules or the nature of their business. Multi-cloud environments empower businesses by letting them avoid compromising on these choices.

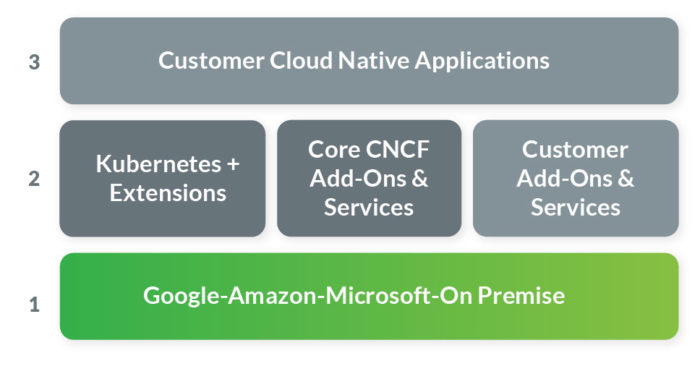

Kubernetes is infrastructure- and technology-agnostic: it runs on physical or virtual machines, on Linux (with Windows supported for worker nodes), on practically any infrastructure, and it is supported by all major cloud providers. When we think about Kubernetes, we should start seeing it as an infrastructure abstraction layer, even on top of the cloud.

If a business chooses a cloud provider and that decision later proves wrong, Kubernetes makes the transition to a different provider much less painful. A gradual migration to a multi-cloud environment, a hybrid environment, or even on-premises can often be achieved without redesigning the application or rethinking the whole deployment. There are even vendors and platforms that provide tooling for such transitions, like Kublr or Cloud Foundry. The evolving needs of businesses have to be met one way or another, and the portability, flexibility, and extensibility that Kubernetes offers should not be overlooked.

This portability benefits developers as well, because the infrastructure can now be abstracted away from the application. Developers can focus on writing code and adding value to the product while still retaining considerable control over how the infrastructure is set up, without worrying about where that infrastructure will be.

“We need to stop writing infrastructure… One day there will be cohorts of developers coming through that don’t write infrastructure code anymore. Just like probably not many of you build computers.” – Alexis Richardson, CEO, Weaveworks

Kubernetes as the Cloud-Native Platform

Cloud-native is an approach to designing, building, and running applications whose characteristics and deployment methodology make them reliable, predictable, scalable, and high-performing. Typically, cloud-native applications have a microservices architecture, run in lightweight containers, and take advantage of cloud technologies.

The ability to adapt rapidly to change is fundamental for businesses that want to keep growing and remain competitive. Cloud-native technologies meet these demands, providing the automation, flexibility, predictability, and observability needed to manage such applications.

Conclusion

With all that said, it is safe to conclude that Kubernetes is here to stay. That’s why we use it extensively here at IT Labs, future-proofing our clients’ products and setting them up for continued success. Why? Because it allows businesses to maximize the potential of the cloud. Some predict that it will become an invisible essential of all software development. It has a large community that puts great effort into building and improving this cloud-native ecosystem. Let’s be part of it.

Kostadin Kadiev

Technical Lead at IT Labs