Serverless computing, also known as ‘Function as a Service’ (FaaS), is one of the most talked-about cloud topics today. It’s the fastest-growing extended cloud service in the world. It has also become very popular among enterprises, and there has been a significant shift in the way companies operate. Today, building a reliable application might not be enough, especially for enterprises that face strong competition, heavy pressure to optimize costs, and demands for a faster time to market. By using a serverless architecture, companies don’t need to worry about virtual machines or pay extra for idle hardware, and they can easily scale their applications and services up or down as the business requires.

Software development has entered a new era because of the way serverless computing has affected businesses through its offer of high availability and virtually unlimited scale. In a nutshell, serverless computing is a computing platform that lets its users focus solely on writing code, without being concerned with the infrastructure, because the cloud provider manages the allocation of resources. This means that cloud vendors such as AWS and Azure take care of the operational part, while companies focus on the ‘business’ part of their business. In this way, companies can bring solutions to market much faster and at a lower cost. Furthermore, serverless computing is event-driven and removes the burden of maintaining infrastructure code. Since the cloud handles infrastructure demands, you don’t need to write and maintain code for handling events, queues, and other infrastructure-layer elements. You just need to focus on writing functional features that react to specific events related to your application requirements.

The unit of deployment in serverless computing is an autonomous function, which is why the platform is called Function as a Service (FaaS).

The core concepts of FaaS are:

- Full abstraction of servers away from developers

- Cost based on consumption and executions, not the traditional server instance sizes

- Event-driven services that are instantaneously scalable and reliable

The benefits of using FaaS in software architecture are many; in short, it reduces the operational workload on developers and increases the time they spend writing application code. FaaS also requires you to build modular business logic and package it into the smallest shippable units. If a workload spike hits some functions, FaaS scales those overloaded functions automatically and independently, as opposed to scaling your entire application, which avoids the cost overhead of paying for idle resources. If you decide to go with FaaS, you’ll get built-in availability and fault tolerance for your solution, with server infrastructure managed by the FaaS cloud provider. Overall, you’ll get to deploy your code directly to the cloud, run functions as a service, and pay as you go for invocations and resources used. If your functions are idle, you won’t be charged for them.

In a FaaS-based application, scaling happens at the function level, and new function instances are started as they are needed. This finer granularity avoids bottlenecks. The downside of functions is the need for orchestration, since many small components (functions) need to communicate with each other. Orchestration is the extra configuration work that is necessary to define how functions discover and talk to each other.

In this blog post, I will compare the FaaS offerings of the two biggest public cloud providers available, AWS and Azure, giving you direction to choose the right one for your business needs.

Azure Functions and AWS Lambda offer similar functionality and advantages. Both include the ability to pay only for the time that functions run, instead of continuously charging for a cloud server even if it’s idle. However, there are some important differences in pricing, programming language support, and deployment between the two serverless computing services.

AWS Lambda

In 2014, Amazon became the first public cloud provider to release a serverless, event-driven architecture, and AWS Lambda continues to be synonymous with the concept of FaaS. Undoubtedly, AWS Lambda is one of the most popular FaaS offerings out there.

AWS Lambda has built-in support for Java, Go, PowerShell, Node.js, C#, Python, and Ruby programming languages and is working on bringing in support for other languages in the future. All these runtimes are maintained by AWS and are provided on top of the Amazon Linux operating system.

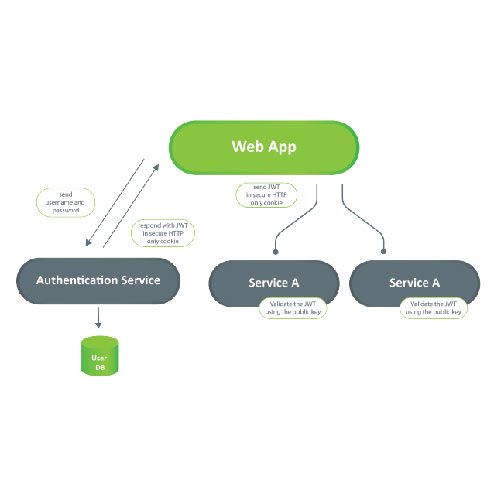

AWS Lambda has one straightforward programming model for integration with other AWS services. Each service that integrates with Lambda sends data to your function in JSON as an event, and the function may return JSON as output. The structure of the event document differs for each event type; the schemas of these objects are documented and defined in the language SDKs.
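To make the event-in, JSON-out model concrete, here is a minimal sketch of a Python handler for an S3 notification. The `lambda_handler(event, context)` signature is the conventional Python entry point; the sample event is a trimmed, hand-written stand-in with hypothetical bucket and key names, not a full S3 payload.

```python
import json


def lambda_handler(event, context):
    """Entry point that Lambda calls with the event document and a context object."""
    # S3 notifications arrive as a list of records; each one names a bucket and an object key.
    uploaded = [
        {
            "bucket": record["s3"]["bucket"]["name"],
            "key": record["s3"]["object"]["key"],
        }
        for record in event.get("Records", [])
    ]
    # The return value must be JSON-serializable so it can be passed back to the caller.
    return {"count": len(uploaded), "objects": uploaded}


# Trimmed sample event for local experimentation (hypothetical values).
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "example-bucket"},
                "object": {"key": "reports/2020.csv"},
            }
        }
    ]
}

if __name__ == "__main__":
    print(json.dumps(lambda_handler(sample_event, None), indent=2))
```

Because the handler is an ordinary function, it can be called locally with a dictionary like `sample_event` before it is ever deployed.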

AWS Lambda offers a rich set of dynamic, configurable triggers that you can use to invoke your functions. Natively, a Lambda function can be invoked only from other AWS services. You can configure a synchronous HTTP trigger using API Gateway or an Application Load Balancer, a dynamic trigger on a DynamoDB action, an asynchronous file-based trigger based on Amazon S3 usage, a message-based trigger based on Amazon SNS, and so on.

As a hosting option, you can choose a memory allocation between 128 MB and 3 GB for AWS Lambda. The available CPU power and the associated running costs vary with the chosen allocation. For optimal performance and cost savings on your Lambda functions, you need to balance the memory size against the execution time of the function. The default function timeout is 3 seconds, but it can be increased to 15 minutes. If your function typically takes more than 15 minutes, you should consider alternatives, such as AWS Step Functions or a traditional solution on EC2 instances.

AWS Lambda supports deploying source code uploaded as a ZIP package. There are several third-party tools, as well as AWS’s own CodeDeploy and the AWS SAM tool, which uses AWS CloudFormation as the underlying deployment mechanism, but they all do more or less the same thing under the hood: package to ZIP and deploy. The package can be uploaded directly to Lambda, or you can upload it to an Amazon S3 bucket and deploy it to Lambda from there. As a limitation, the zipped Lambda code package should not exceed 50 MB in size, and the unzipped version shouldn’t be larger than 250 MB. It is important to note that versioning is available for Lambda functions, whereas it doesn’t apply to Azure Functions.

With AWS, as with other FaaS providers, you only pay for the invocation requests and the compute time needed to run your function code. It comes with 1 million free requests and 400,000 GB-seconds per month. It’s important to note that AWS Lambda charges for the full provisioned memory capacity. The service charges for at least 100 ms and 128 MB for each execution, rounding the time up to the nearest 100 ms.
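The billing rules above can be turned into a rough monthly estimate. The sketch below takes the free tier and the 100 ms rounding from the text; the two price constants are assumed list prices used only for illustration, so check the current AWS price list before relying on the result.

```python
import math

# Figures taken from the text above.
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000
BILLING_STEP_MS = 100

# Assumed prices, for illustration only (not taken from this article).
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667


def billed_gb_seconds(duration_ms, memory_mb, invocations):
    """GB-seconds billed when each run is rounded up to the next 100 ms step."""
    steps = max(1, math.ceil(duration_ms / BILLING_STEP_MS))
    billed_ms = steps * BILLING_STEP_MS
    # Lambda bills the provisioned memory, not the memory actually used.
    return invocations * (billed_ms / 1000) * (memory_mb / 1024)


def monthly_cost(duration_ms, memory_mb, invocations):
    """Estimated monthly charge in dollars after subtracting the free tier."""
    gb_seconds = billed_gb_seconds(duration_ms, memory_mb, invocations)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    billable_requests = max(0, invocations - FREE_REQUESTS)
    request_cost = billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = billable_gb_seconds * PRICE_PER_GB_SECOND
    return request_cost + compute_cost


if __name__ == "__main__":
    # A 120 ms function is billed as 200 ms; 512 MB counts as 0.5 GB.
    print(round(monthly_cost(duration_ms=120, memory_mb=512, invocations=5_000_000), 2))
```

Note how a function that runs for 120 ms is billed for 200 ms: shaving it below 100 ms would halve the compute charge, which is why balancing memory size against execution time matters.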

AWS Lambda uses the concept of layers as a way to centrally manage code and data that are shared across multiple functions. In this way, you can put all the shared dependencies in a single ZIP file and upload it as a Lambda layer.

Orchestration is another important feature that FaaS doesn’t provide out of the box. The question of how to build large applications and systems out of those tiny pieces is still open, but some composition patterns already exist. To this end, Amazon enables you to coordinate multiple AWS Lambda functions for complex or long-running tasks by building workflows with AWS Step Functions. Step Functions lets you define workflows that trigger a collection of Lambda functions using sequential, parallel, branching, and error-handling steps. With Step Functions and Lambda, you can build stateful, long-running processes for applications and back ends.
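As an illustration of sequential and error-handling steps, the following sketch builds a small workflow definition in Amazon States Language as a Python dictionary. The state names and function ARNs are hypothetical placeholders, and the helper only performs a basic sanity check on transitions; it is not a substitute for the validation the service itself performs.

```python
import json

# Hypothetical function ARNs; replace them with your own.
VALIDATE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:validate-order"
CHARGE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:charge-card"

state_machine = {
    "Comment": "Validate an order, then charge the card",
    "StartAt": "ValidateOrder",
    "States": {
        # Sequential step: run the first function, then move on.
        "ValidateOrder": {
            "Type": "Task",
            "Resource": VALIDATE_ARN,
            "Next": "ChargeCard",
        },
        # Error handling: retry with backoff, then fall through to a failure state.
        "ChargeCard": {
            "Type": "Task",
            "Resource": CHARGE_ARN,
            "Retry": [
                {
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 2,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }
            ],
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "OrderFailed"}],
            "End": True,
        },
        "OrderFailed": {
            "Type": "Fail",
            "Error": "OrderFailed",
            "Cause": "Charging the card failed after retries",
        },
    },
}


def unresolved_targets(definition):
    """Return state names referenced by StartAt, Next, or Catch that are not defined."""
    states = definition["States"]
    referenced = {definition["StartAt"]}
    for state in states.values():
        if "Next" in state:
            referenced.add(state["Next"])
        for catcher in state.get("Catch", []):
            referenced.add(catcher["Next"])
    return sorted(referenced - set(states))


if __name__ == "__main__":
    assert unresolved_targets(state_machine) == []
    print(json.dumps(state_machine, indent=2))
```

The printed JSON is the kind of document you would supply as the state machine definition; each Lambda function stays independent, and the workflow alone decides the order and the failure path.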

AWS Lambda functions are built as standalone elements, meaning each function acts as its own independent program. This separation also extends to resource allocation. For example, AWS provisions memory on a per-function basis rather than per application group. You can deploy any number of functions in a single operation, but each one has its own environment variables. Also, AWS Lambda always reserves a separate instance for a single execution. Each execution has its exclusive pool of memory and CPU cycles. Therefore, the performance is predictable and stable.

AWS has been playing this game longer than all other serverless providers. Although there are no established industry-wide benchmarks, many claim that AWS Lambda is better for rapid scale-out and handling massive workloads, both for web APIs and queue-based applications. The bootstrapping delay effect (cold starts) is also said to be less significant with Lambda.

Azure Functions

Azure Functions, a newer player in the field of serverless computing, was introduced by Microsoft Azure in 2016, although Microsoft had been offering PaaS for several years before that. Today, Microsoft Azure with Azure Functions is established as one of the biggest FaaS providers.

Azure Functions has built-in support for C#, F#, JavaScript (Node.js), Java, PowerShell, Python, and TypeScript and is working on bringing in support for additional languages in the future. All runtimes are maintained by Azure and are provided on top of both Linux and Windows operating systems.

Azure Functions has a more sophisticated programming model based on triggers and bindings, helping you respond to events and seamlessly connect to other services. A trigger is an event that the function listens to. The function may have any number of input and output bindings to pull or push extra data at the time of processing. For example, an HTTP-triggered function can also read a document from Azure Cosmos DB and send a queue message, all done declaratively via binding configuration.

With Microsoft Azure Functions, you’re given a similar set of dynamic, configurable triggers that you can use to invoke your functions. They allow access via a web API, as well as invoking the functions based on a schedule. Microsoft also provides triggers from its other services, such as Azure Storage and Azure Event Hubs, and there is even support for SMS-triggered invocations using Twilio or email-triggered invocations using SendGrid. It’s important to note that Azure Functions comes with HTTP endpoint integration out of the box at no additional cost, which isn’t the case for AWS Lambda.

Microsoft Azure offers multiple hosting plans for your Azure Functions, so you can choose the one that fits your business needs. There are three basic hosting plans available: the Consumption plan, the Premium plan, and the Dedicated (App Service) plan. The hosting plan you choose dictates how your functions are scaled, what resources are available to each function, and the costs. For example, the Consumption plan has the lowest management overhead and no fixed-cost component, which makes it the most serverless hosting option on the list. On this plan, each instance of the Functions host is limited to 1.5 GB of memory and one CPU. An instance of the host is the entire function app, meaning all functions within a function app share resources within an instance and scale at the same time. Function apps that share the same Consumption plan are scaled independently. On the Consumption plan, the default timeout is 5 minutes and can be increased to 10 minutes. If your function needs to run for more than 10 minutes, you can choose the Premium or App Service plan, which increases the default timeout to 30 minutes and lets you extend it to unlimited.

The core engine behind the Azure Functions service is the Azure Functions Runtime. When a request is received, the payload is loaded, incoming data is mapped, and finally, the function is invoked with parameter values. When the function execution is completed, an outgoing parameter is passed back to the Azure Functions Runtime.

Microsoft Azure provides the Durable Functions extension as a library that brings workflow orchestration abstractions to code. It comes with several application patterns for combining multiple serverless functions into long-running stateful flows. The library handles communication and state management robustly and transparently while keeping the API surface simple. The Durable Functions extension is built on top of the Durable Task Framework, an open-source library that’s used to build workflows in code, and it’s a natural fit for the serverless Azure Functions environment.

Azure also enables you to coordinate multiple functions by building workflows with the Azure Logic Apps service. Functions are used as steps in those workflows, allowing them to stay independent but still solve significant tasks.

Azure Functions offers a richer set of deployment options, including integration with many code repositories. It doesn’t, however, support versioning of functions. Also, functions are grouped on a per-application basis, with this being extended to resource provisioning as well. This allows multiple Azure Functions to share a single set of environment variables instead of specifying their environment variables independently.

The Consumption plan has a payment model similar to that of AWS. You only pay for the number of triggers and the execution time. It comes with 1 million free requests and 400,000 GB-seconds per month. It’s important to note that Azure Functions measures the actual average memory consumption of executions. If executions share an instance, the memory cost isn’t charged multiple times but is shared between them, which may lead to noticeable reductions. The Premium plan, on the other hand, provides enhanced performance and is billed on a per-second basis based on the number of vCPU-seconds and GB-seconds your Premium functions consume. Furthermore, if App Service is already being used for other applications, you can run Azure Functions on the same plan for no additional cost.
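The difference between billing for provisioned memory and billing for measured memory can be sketched with a small calculation. The 100 ms rounding for Lambda comes from the description earlier in this post; the Azure rules used here (observed memory rounded up to the next 128 MB, a 100 ms minimum execution time) are assumptions for illustration and should be checked against the current Azure pricing page. The sketch also ignores the savings from executions that share an instance.

```python
import math


def lambda_gb_seconds(duration_ms, provisioned_mb):
    """GB-seconds for one Lambda run: provisioned memory, time rounded up to 100 ms."""
    billed_ms = max(100, math.ceil(duration_ms / 100) * 100)
    return (billed_ms / 1000) * (provisioned_mb / 1024)


def azure_consumption_gb_seconds(duration_ms, observed_mb):
    """GB-seconds for one Consumption plan run under the assumed rounding rules."""
    # Assumption: observed memory is rounded up to the next 128 MB step.
    billed_mb = max(128, math.ceil(observed_mb / 128) * 128)
    # Assumption: a single execution is billed for at least 100 ms.
    billed_ms = max(100, duration_ms)
    return (billed_ms / 1000) * (billed_mb / 1024)


if __name__ == "__main__":
    # Same workload on both: 800 ms per run, 1024 MB provisioned, about 300 MB actually used.
    print("Lambda:", lambda_gb_seconds(800, 1024))
    print("Azure Consumption:", azure_consumption_gb_seconds(800, 300))
```

With these inputs, the function that is over-provisioned on Lambda accrues more GB-seconds than the same run measured on Azure, which is the effect the paragraph above describes. Right-sizing the Lambda memory setting narrows the gap.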

Features: AWS Lambda vs Azure Functions

Conclusion

As we see from a comparison of Azure Functions vs. AWS Lambda, regardless of which solution you choose, the serverless computing strategy can solve many challenges, saving your organization significant effort, relative to traditional software architecture approaches.

Although AWS and Azure have different architecture approaches for their FaaS services, both offer many similar capabilities, so it’s not necessarily a matter of one provider being “better” or “worse” than the other. If you get into the details, you will see a few essential differences between the two. However, it’s safe to say that your selection is unlikely to be based on these differences.

AWS has been on the market for serverless services longer. As good as it sounds, the serverless architecture is not one-size-fits-all. If your application already depends on a specific provider, it can be useful to tie yourself strongly into that provider’s ecosystem by leveraging service-provided triggers for executing your app’s logic. For example, if you run a Windows-dominant environment, you might find that Azure Functions is what you need. But whether you need to select one of these two options or you switch between providers, it’s crucial to adjust your mindset and coding to match the best practices suggested by that particular provider.

To summarize, this post aims to point out the differences between the cloud providers, helping you choose the FaaS that best suits your business.

Bojan Stojkov

Senior BackEnd Engineer at IT Labs