AWS Provisioned concurrency – why use it

Elvira Sorko

Senior Back End Engineer at IT Labs

At my company, we’ve recently been working on a serverless project that uses AWS Lambda functions as the backend for an API Gateway REST API. With Lambda functions, cold starts were our greatest concern. Our Lambda functions are mostly written in Java and .NET, which often experience cold starts that last several seconds! Reading different articles, we found that this was a concern for many others in the serverless community. AWS heard those concerns and provided a means to address the problem: in 2019 it announced Provisioned Concurrency, a feature that lets Lambda customers stop worrying about cold starts.

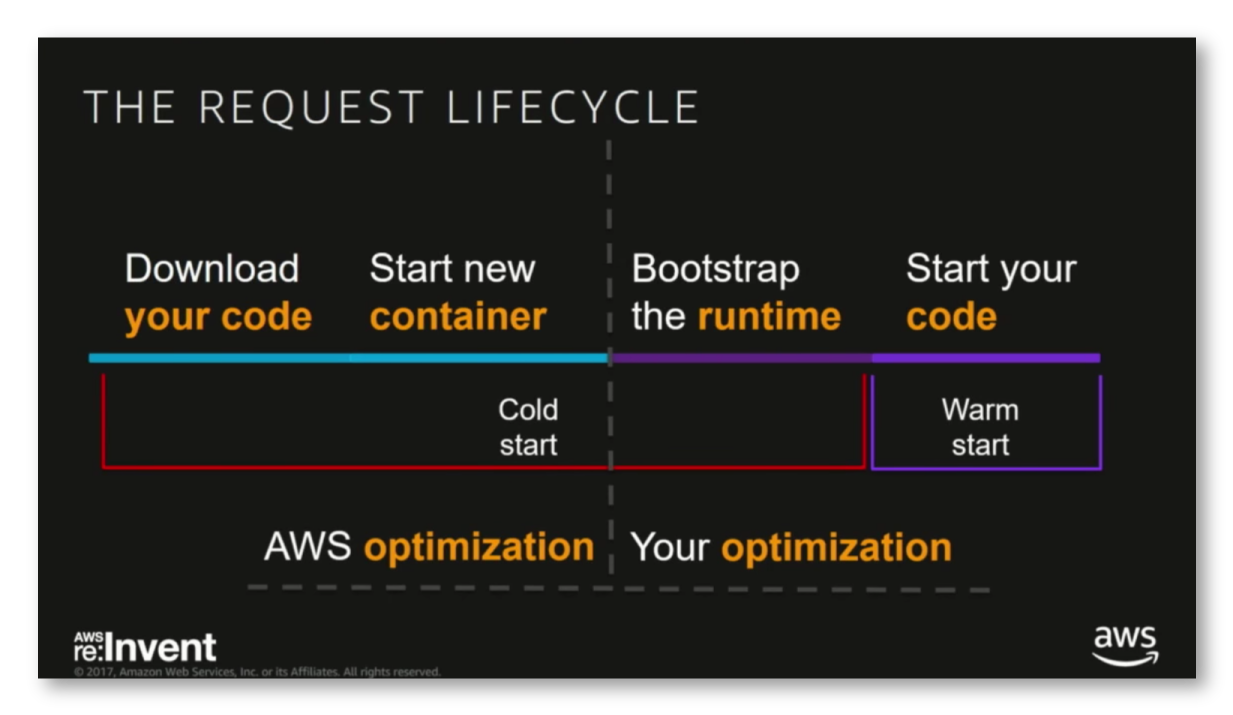

So, if your Lambda function has just been deployed, or has not been invoked for some time, it will be cold. When you invoke a Lambda function, the invocation is routed to an execution environment that processes the request. If no warm environment is available, a new one has to be instantiated: the runtime is loaded, your code and all of its dependencies are imported, and only then is your code executed. Depending on the size of your deployment package and the initialization time of the runtime and of your code, this process can take several seconds before any execution actually starts. This latency is usually referred to as a “cold start”, and you can monitor it in X-Ray as initialization time. After execution, however, the micro VM that took some time to spin up is kept available for a while (anywhere up to an hour, though AWS does not guarantee a fixed period), and if a new event comes in during that time, execution can begin immediately.
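The split between one-time initialization and per-invocation work can be sketched as follows. This is an illustrative Python handler, not code from the project; the module-level names are made up for the example, and the dictionary merely stands in for expensive setup such as creating SDK clients or loading configuration.

```python
import time

# Module-level code runs once per execution environment, during the init phase.
# On a cold start this is the part the caller ends up waiting for.
_init_started = time.perf_counter()
_expensive_resource = {"connected": True}  # stand-in for SDK clients, config, etc.
_init_seconds = time.perf_counter() - _init_started

# Counts how many invocations this particular environment has served.
_invocations_in_this_environment = 0


def handler(event, context):
    """Runs on every invocation and reuses whatever the init phase prepared."""
    global _invocations_in_this_environment
    _invocations_in_this_environment += 1
    return {
        # True only for the first invocation served by this environment.
        "first_in_environment": _invocations_in_this_environment == 1,
        "init_seconds": _init_seconds,
        "resource_ready": _expensive_resource["connected"],
    }
```

Calling `handler` twice in the same process reports `first_in_environment` as true only the first time, which mirrors how a warm environment skips the initialization work.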

Our first attempt to prevent cold starts was the Thundra warm-up plugin (https://github.com/thundra-io/thundra-lambda-warmup). Using this plugin requires implementing some branching logic in each Lambda function to determine whether an invocation is a warm-up execution or an actual execution. The plugin itself cost nothing except the time spent implementing it in our existing Lambda function code, but the results were not satisfactory.
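The branching logic mentioned above looks roughly like this. It is a minimal Python sketch under one loud assumption: the `warmup` key used to recognize a warm-up event is hypothetical, not the payload the Thundra plugin actually sends, so check the plugin's README for the real format. `handle_request` is a placeholder for the business logic.

```python
WARMUP_MARKER = "warmup"  # hypothetical key, NOT the plugin's documented payload


def is_warmup(event):
    """Return True when the event looks like a keep-warm ping."""
    return isinstance(event, dict) and event.get(WARMUP_MARKER) is True


def handle_request(event):
    """Placeholder for the function's real work."""
    return {"statusCode": 200, "body": "lookup results"}


def handler(event, context):
    # Short-circuit warm-up pings so they never reach the business logic.
    if is_warmup(event):
        return {"warmed": True}
    return handle_request(event)
```

Every function that should stay warm needs this check, which is the implementation cost described above.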

Then we decided to set aside a certain budget, as AWS services cost money, and use the AWS feature Provisioned Concurrency. As the AWS documentation says, this is a feature that keeps functions initialized and hyper-ready to respond in double-digit milliseconds. It works for all Lambda runtimes and requires no code change to existing functions. Great! That’s what we needed.

How it works

When you enable Provisioned Concurrency for a function, the Lambda service initializes the requested number of execution environments so that they are ready to respond to invocations. With Provisioned Concurrency, the worker nodes stay in a state where your code is already downloaded and the underlying container structure is all set. So, if you expect spikes in traffic, it’s a good idea to provision a higher number of worker nodes. If more invocations arrive than the provisioned worker nodes can handle, the overflow invocations are handled conventionally, with on-demand worker nodes initialized per request.
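The overflow behaviour can be expressed as simple arithmetic. The function below is a simplified model for illustration only: it assumes every in-flight request occupies exactly one execution environment and ignores account-level concurrency limits.

```python
def split_invocations(concurrent_requests, provisioned):
    """Split simultaneous requests between provisioned and on-demand environments.

    Returns a tuple (served_by_provisioned, served_on_demand).
    """
    if concurrent_requests < 0 or provisioned < 0:
        raise ValueError("counts must be non-negative")
    served_by_provisioned = min(concurrent_requests, provisioned)
    served_on_demand = concurrent_requests - served_by_provisioned
    return served_by_provisioned, served_on_demand
```

With 10 provisioned environments, 8 simultaneous requests are all served warm, while 25 simultaneous requests leave 15 to on-demand environments that may cold start.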

It is important to know that you pay for the amount of concurrency that you configure and for the period of time that you configure it. When Provisioned Concurrency is enabled for your function and you execute it, you also pay for Requests and Duration based on the prices in the AWS documentation. If the concurrency for your function exceeds the configured concurrency, the excess invocations are billed at the rate outlined in the AWS Lambda Pricing section.

The Lambda free tier does not apply to functions that have Provisioned Concurrency enabled. If you enable Provisioned Concurrency for your function and execute it, you will be charged for Requests and Duration.
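To see how the three charges add up, here is a rough estimator. It is a sketch under stated assumptions: all prices are passed in as parameters because they vary by region and change over time (take them from the AWS Lambda Pricing page), it assumes every invocation fits within the configured concurrency, and it ignores billing granularity and rounding.

```python
def estimate_cost(
    configured_concurrency,
    memory_gb,
    hours_enabled,
    requests,
    avg_duration_seconds,
    price_provisioned_gb_second,
    price_duration_gb_second,
    price_per_request,
):
    """Estimate Provisioned Concurrency charges for a billing period."""
    # Charged for as long as the concurrency is configured, used or not.
    provisioned_gb_seconds = configured_concurrency * memory_gb * hours_enabled * 3600
    # Charged only for time actually spent executing requests.
    duration_gb_seconds = requests * avg_duration_seconds * memory_gb

    costs = {
        "provisioned": provisioned_gb_seconds * price_provisioned_gb_second,
        "duration": duration_gb_seconds * price_duration_gb_second,
        "requests": requests * price_per_request,
    }
    costs["total"] = costs["provisioned"] + costs["duration"] + costs["requests"]
    return costs
```

The `provisioned` term is the one that scheduling reduces: halving `hours_enabled` halves that part of the bill regardless of traffic.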

Configuring Provisioned Concurrency

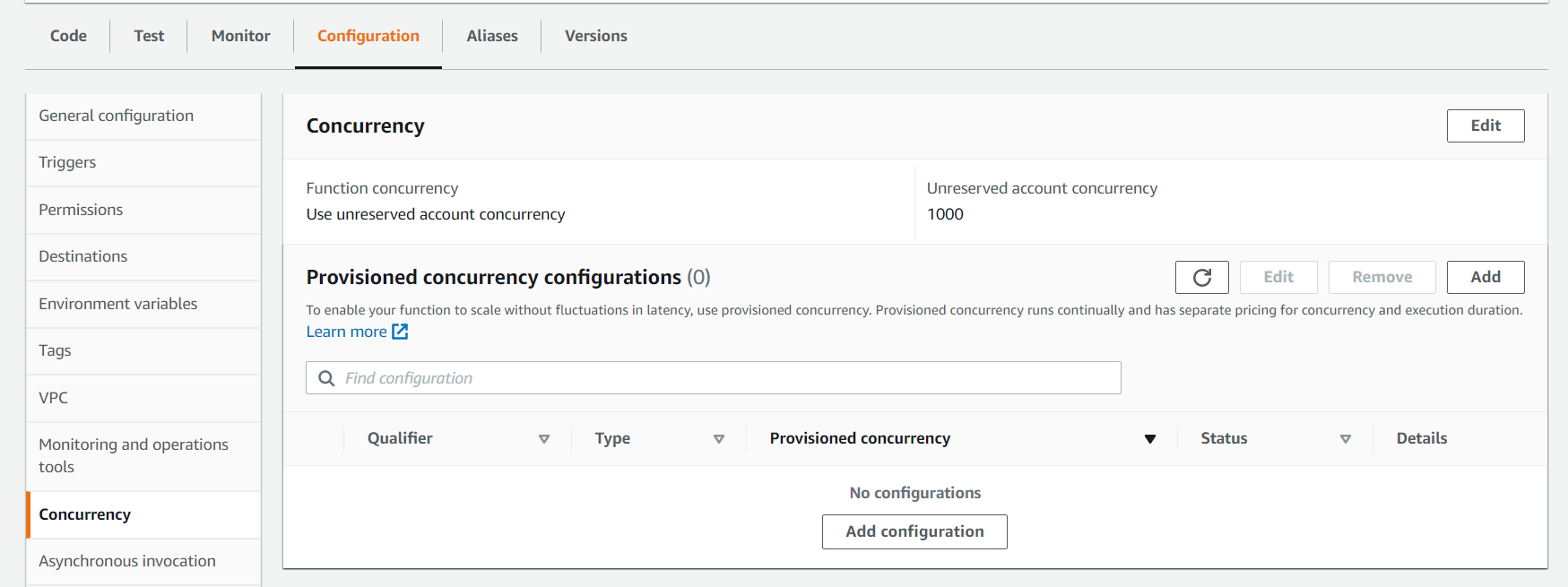

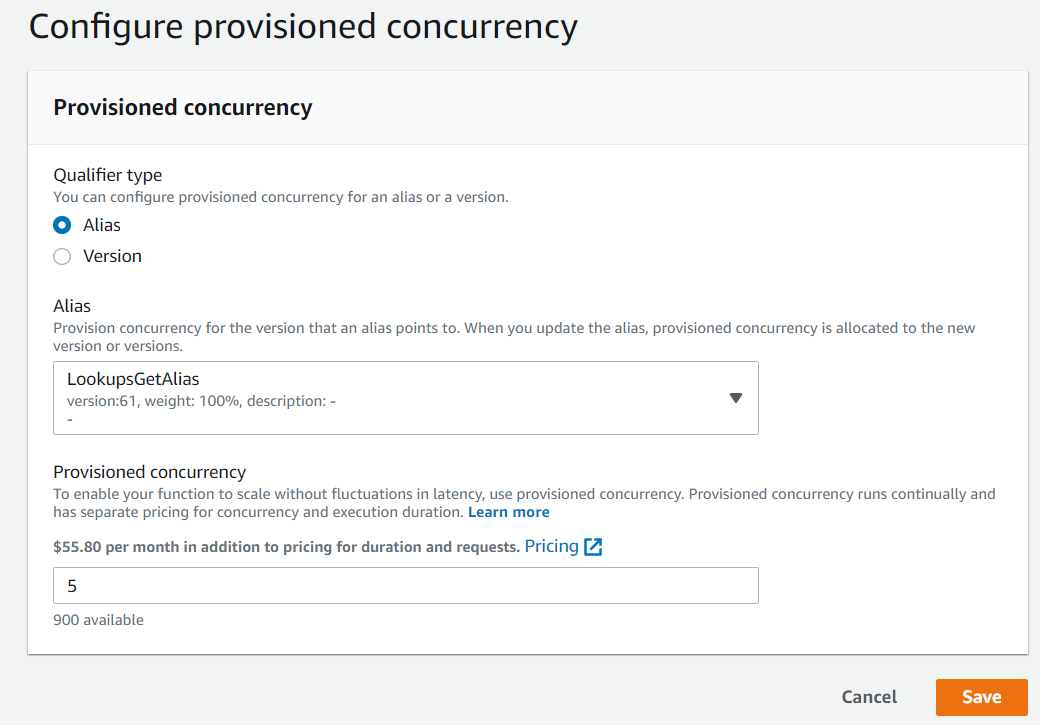

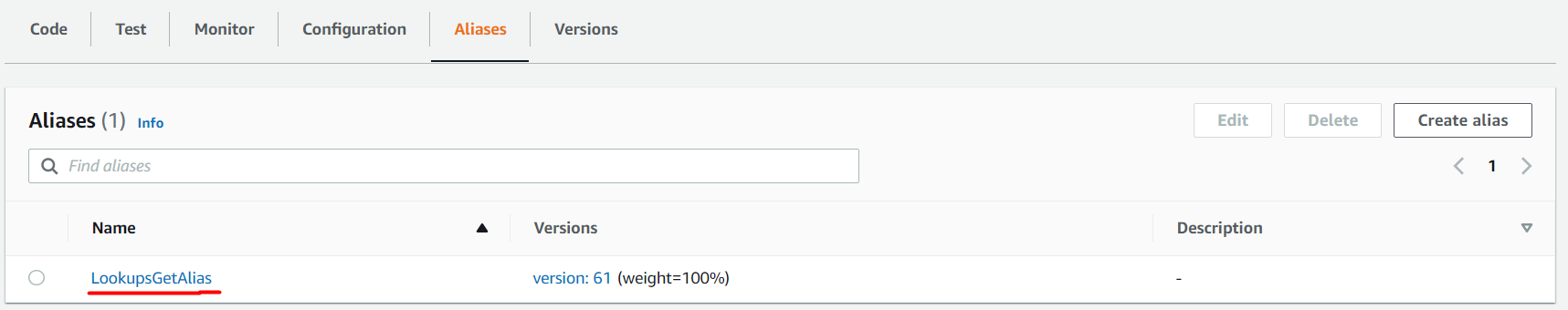

It is important to know that Provisioned Concurrency can be configured ONLY on a Lambda function ALIAS or VERSION. You can’t configure it against the $LATEST version, nor any alias that points to $LATEST.

Provisioned Concurrency can be enabled, disabled and adjusted on the fly using the AWS Console, AWS CLI, AWS SDK or CloudFormation.
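As an example of the SDK route, the sketch below prepares and sends a `PutProvisionedConcurrencyConfig` request with boto3. The helper names are made up for this example, and the client is passed in (for instance `boto3.client("lambda")`) so the request-building part stays free of AWS dependencies. The local check only catches a literal `$LATEST` qualifier; an alias that points to `$LATEST` is rejected by the service itself.

```python
def build_provisioned_concurrency_request(function_name, qualifier, executions):
    """Build the parameters for put_provisioned_concurrency_config."""
    if qualifier == "$LATEST":
        raise ValueError("Provisioned Concurrency needs a published version or an alias")
    if executions < 1:
        raise ValueError("executions must be at least 1")
    return {
        "FunctionName": function_name,
        "Qualifier": qualifier,  # alias name or version number
        "ProvisionedConcurrentExecutions": executions,
    }


def enable_provisioned_concurrency(lambda_client, function_name, qualifier, executions):
    """Send the request using a boto3 Lambda client supplied by the caller."""
    params = build_provisioned_concurrency_request(function_name, qualifier, executions)
    return lambda_client.put_provisioned_concurrency_config(**params)
```

A call such as `enable_provisioned_concurrency(boto3.client("lambda"), "lookups-get", "LookupsGetAlias", 5)` would request five environments for the alias; the function name `lookups-get` is a placeholder.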

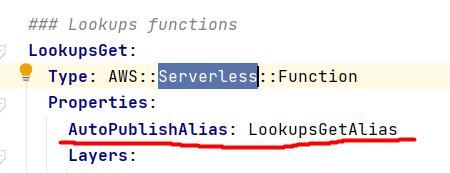

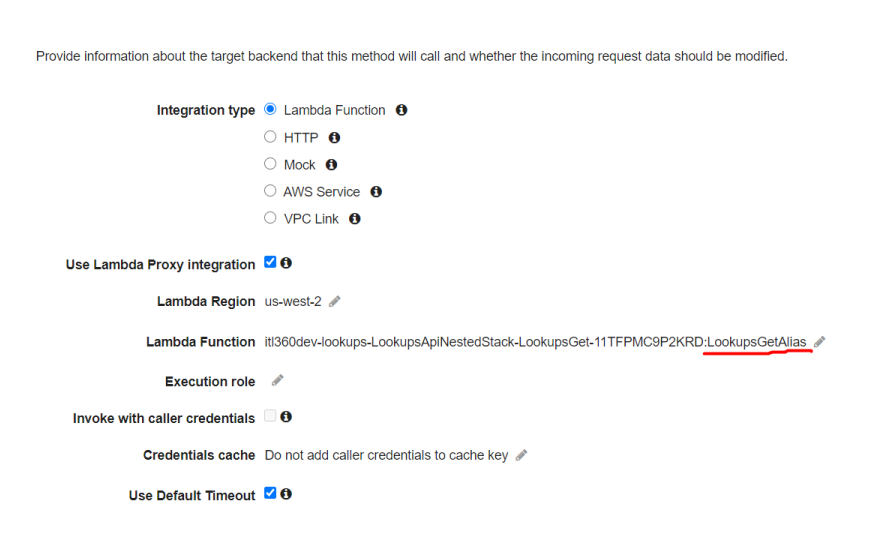

In our case, we selected the alias LookupsGetAlias, which we keep pointed at the latest version using the AutoPublishAlias property of the function in our AWS SAM (Serverless Application Model) template.

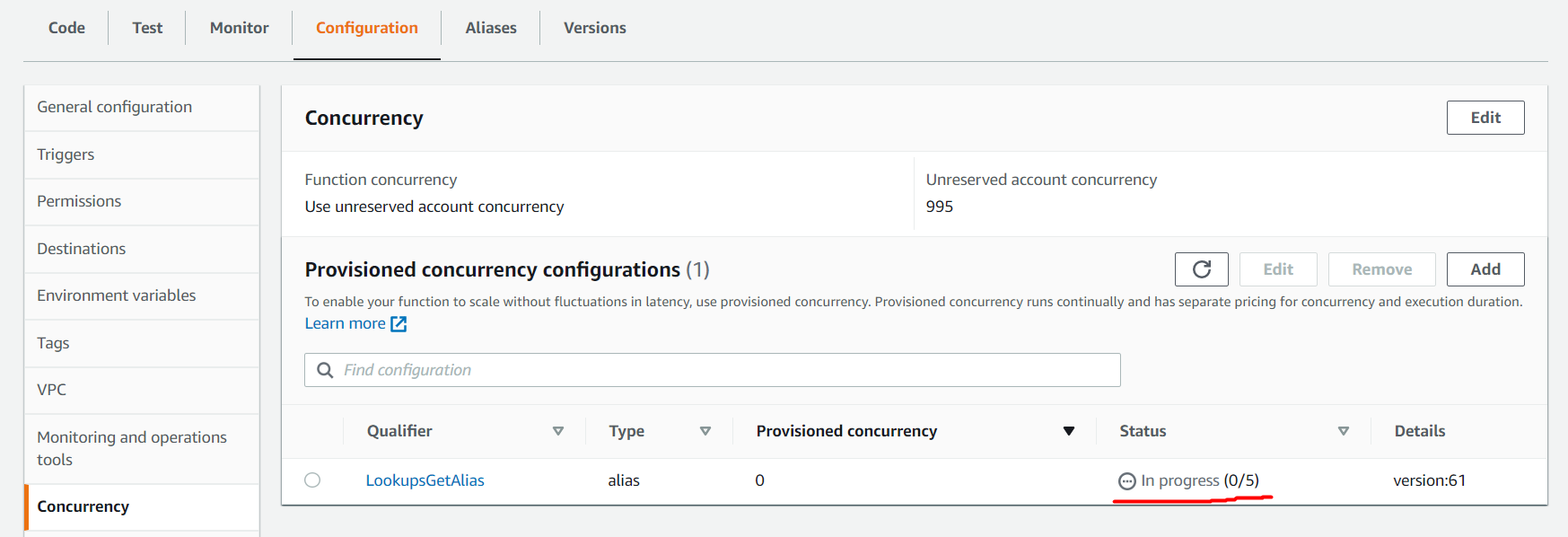

Enabling Provisioned Concurrency can take a few minutes (the time needed to prepare and start the execution environments), and you can check its progress in the AWS Console. During this time, the function remains available and continues to serve requests.

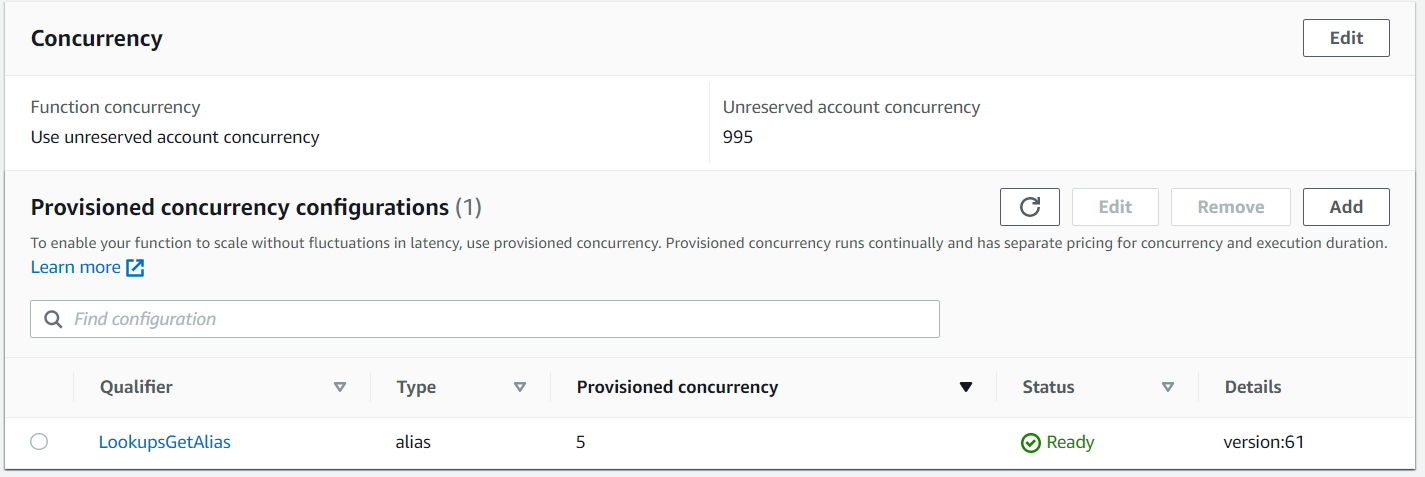

Once fully provisioned, the Status changes to Ready, and invocations up to the configured concurrency are no longer executed on regular on-demand worker nodes.
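The same progress check can be scripted instead of watched in the console. The sketch below polls `get_provisioned_concurrency_config` until the status is `READY`; the function name, polling interval, and retry limit are arbitrary choices for this example, and the client is expected to be a boto3 Lambda client created by the caller.

```python
import time


def wait_until_ready(lambda_client, function_name, qualifier,
                     poll_seconds=10.0, max_polls=60, sleep=time.sleep):
    """Poll the Provisioned Concurrency status until it is READY.

    Raises RuntimeError if provisioning fails and TimeoutError if it does not
    finish within max_polls attempts.
    """
    for _ in range(max_polls):
        config = lambda_client.get_provisioned_concurrency_config(
            FunctionName=function_name, Qualifier=qualifier
        )
        status = config["Status"]  # IN_PROGRESS, READY or FAILED
        if status == "READY":
            return config
        if status == "FAILED":
            raise RuntimeError(config.get("StatusReason", "provisioning failed"))
        sleep(poll_seconds)
    raise TimeoutError("Provisioned Concurrency was not ready in time")
```

The `sleep` parameter exists only so the wait can be replaced in tests; in normal use the default is fine.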

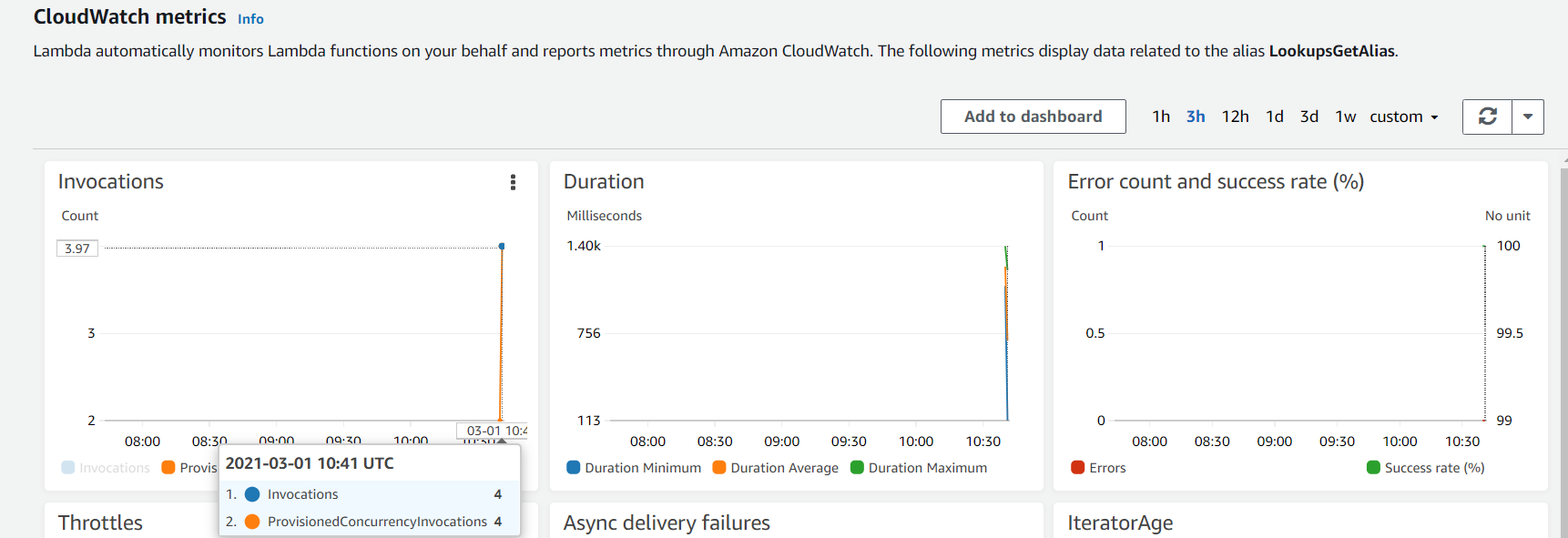

You can check that invocations are handled by Provisioned Concurrency by monitoring the Lambda function alias metrics: execute the Lambda function, select the alias in the AWS Console, and go to Monitor, then Metrics.
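The same metrics are available programmatically from CloudWatch. The sketch below builds a `get_metric_statistics` request for an alias; the helper name and the five-minute period are choices made for this example. Lambda publishes metrics such as `ProvisionedConcurrencyInvocations` and `ProvisionedConcurrencySpilloverInvocations` in the `AWS/Lambda` namespace, with the alias identified by the `Resource` dimension.

```python
from datetime import datetime, timedelta, timezone


def build_alias_metric_request(function_name, alias, metric_name, now=None, minutes=60):
    """Build parameters for CloudWatch get_metric_statistics for one alias."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Lambda",
        "MetricName": metric_name,
        "Dimensions": [
            {"Name": "FunctionName", "Value": function_name},
            # Alias-level metrics use "<function name>:<alias>" as the resource.
            {"Name": "Resource", "Value": f"{function_name}:{alias}"},
        ],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 300,  # seconds per data point
        "Statistics": ["Sum"],
    }
```

Passing the result to `boto3.client("cloudwatch").get_metric_statistics(**request)` returns the data points; a non-zero spillover sum indicates that some invocations fell back to on-demand environments.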

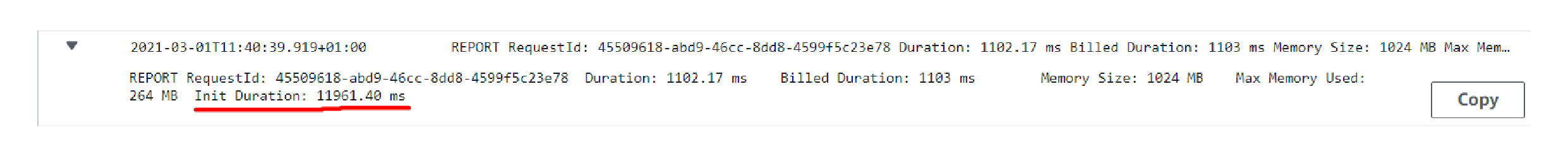

However, as before, the first invocation still reports an Init Duration (the time it takes to initialize the function module) in the REPORT message in CloudWatch Logs. This initialization no longer happens as part of the first invocation; it happens when Lambda provisions the Provisioned Concurrency. The duration is included in the REPORT message purely for the sake of reporting it somewhere.
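If you want to pull these values out of the logs, a small parser is enough. The sketch below extracts the millisecond fields from a REPORT line; the sample line in the usage note is made up for illustration and only follows the general shape of Lambda's REPORT messages.

```python
import re

# Longest names first so "Billed Duration" and "Init Duration" are not
# mistaken for the plain "Duration" field.
_REPORT_FIELD = re.compile(r"(Billed Duration|Init Duration|Duration): ([\d.]+) ms")


def parse_report(line):
    """Return the millisecond fields of a REPORT log line, or None for other lines."""
    if not line.startswith("REPORT"):
        return None
    return {name: float(value) for name, value in _REPORT_FIELD.findall(line)}
```

For a line such as `REPORT RequestId: 1111-2222	Duration: 12.50 ms	Billed Duration: 13 ms	Memory Size: 512 MB	Init Duration: 2345.67 ms`, the result contains the keys `Duration`, `Billed Duration` and `Init Duration`; lines without an init phase simply lack the last key.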

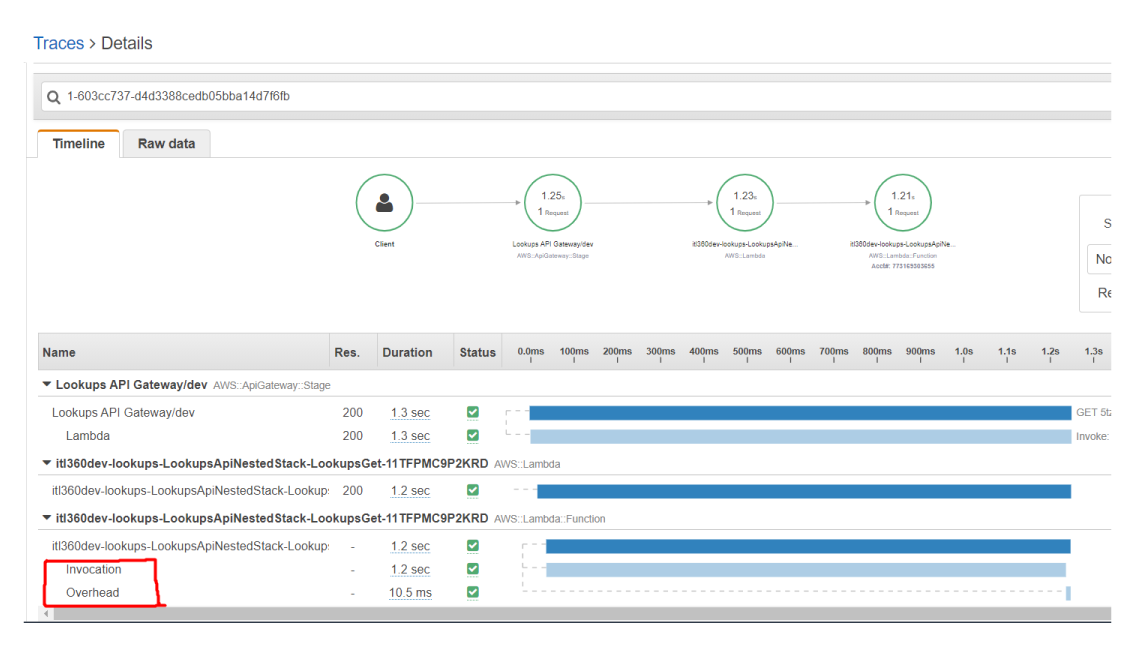

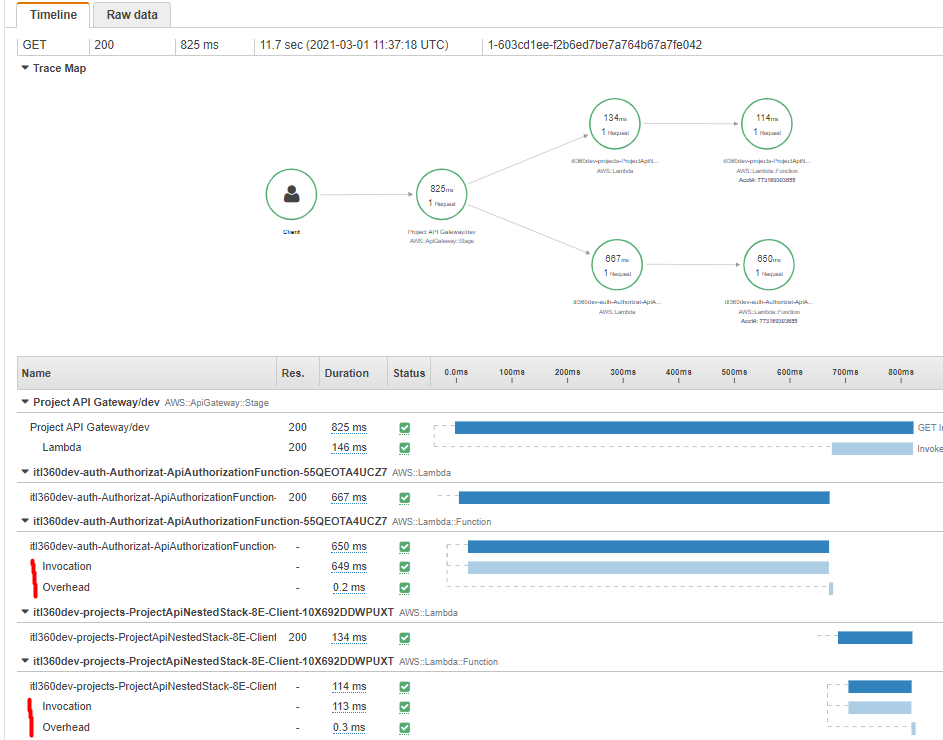

But if you enable X-Ray tracing for the Lambda function, you will find that no initialization time is registered, only execution time.

If you trigger the Lambda function via Amazon API Gateway using LAMBDA_PROXY as the Integration request type, you will need to include the Lambda function alias in the Lambda function reference.
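Outside the console, the alias ends up in the integration URI. The helper below is a sketch that assembles that URI for an alias-qualified function; the helper name is made up, and the region, account ID, and function name in the usage note are placeholders.

```python
def lambda_integration_uri(region, account_id, function_name, alias):
    """Build the API Gateway integration URI for a specific Lambda alias."""
    # Qualifying the function ARN with the alias is what routes requests to
    # the environments kept warm by Provisioned Concurrency.
    function_arn = (
        f"arn:aws:lambda:{region}:{account_id}:function:{function_name}:{alias}"
    )
    return (
        f"arn:aws:apigateway:{region}:lambda:path/2015-03-31/functions/"
        f"{function_arn}/invocations"
    )
```

For example, `lambda_integration_uri("eu-west-1", "123456789012", "lookups-get", "LookupsGetAlias")` yields a URI whose function ARN ends in `:LookupsGetAlias`. Without the alias suffix, API Gateway invokes the unqualified function and bypasses the provisioned environments.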

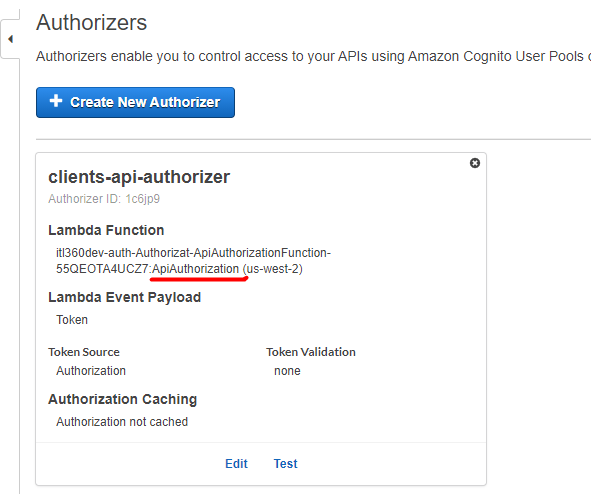

Another important thing to keep in mind when combining Amazon API Gateway with Lambda functions: to prevent cold starts, you need to configure Provisioned Concurrency for all Lambda functions used by the API GW. For example, if you are using a custom authorizer in the API GW Authorizers, you should do the following:

- Configure Provisioned Concurrency on the custom authorizer Lambda function alias.

- Use the Lambda function alias reference in the API GW Authorizers.

- Set permissions with a resource-based policy on the custom authorizer Lambda function alias so that the API GW is allowed to invoke it.

Otherwise, the end user will experience longer load times caused by initialization of the custom authorizer Lambda function. You can see this, and track the performance of your other underlying services, by enabling AWS X-Ray tracing on the API GW stage. Be aware of AWS X-Ray pricing.
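The permission step for the custom authorizer can be scripted as well. The sketch below builds the parameters for the Lambda `add_permission` call against the alias. The helper name and statement ID format are made up for this example, and the source ARN pattern for authorizers is an assumption that should be verified against your own API before use.

```python
def authorizer_permission_request(region, account_id, api_id, authorizer_id,
                                  function_name, alias):
    """Build add_permission parameters so API Gateway may invoke the authorizer alias."""
    return {
        "FunctionName": function_name,
        "Qualifier": alias,  # grant on the alias, not on the unqualified function
        "StatementId": f"apigw-authorizer-{api_id}-{authorizer_id}",
        "Action": "lambda:InvokeFunction",
        "Principal": "apigateway.amazonaws.com",
        # Assumed ARN shape for authorizer invocations of a REST API.
        "SourceArn": (
            f"arn:aws:execute-api:{region}:{account_id}:"
            f"{api_id}/authorizers/{authorizer_id}"
        ),
    }
```

The resulting dictionary can be passed to `boto3.client("lambda").add_permission(**request)`. Granting the permission on the alias matters because a policy attached to the unqualified function does not cover alias-qualified invocations.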

Scheduling AWS Lambda Provisioned Concurrency

For our project, we found that traffic spikes usually occur during the day, from 10 am until 8 pm, and there is no need for provisioned worker nodes during the night. That’s why we decided to use the Application Auto Scaling service to automate scaling of Provisioned Concurrency for Lambda. There is no extra cost for Application Auto Scaling; you only pay for the resources that you use.

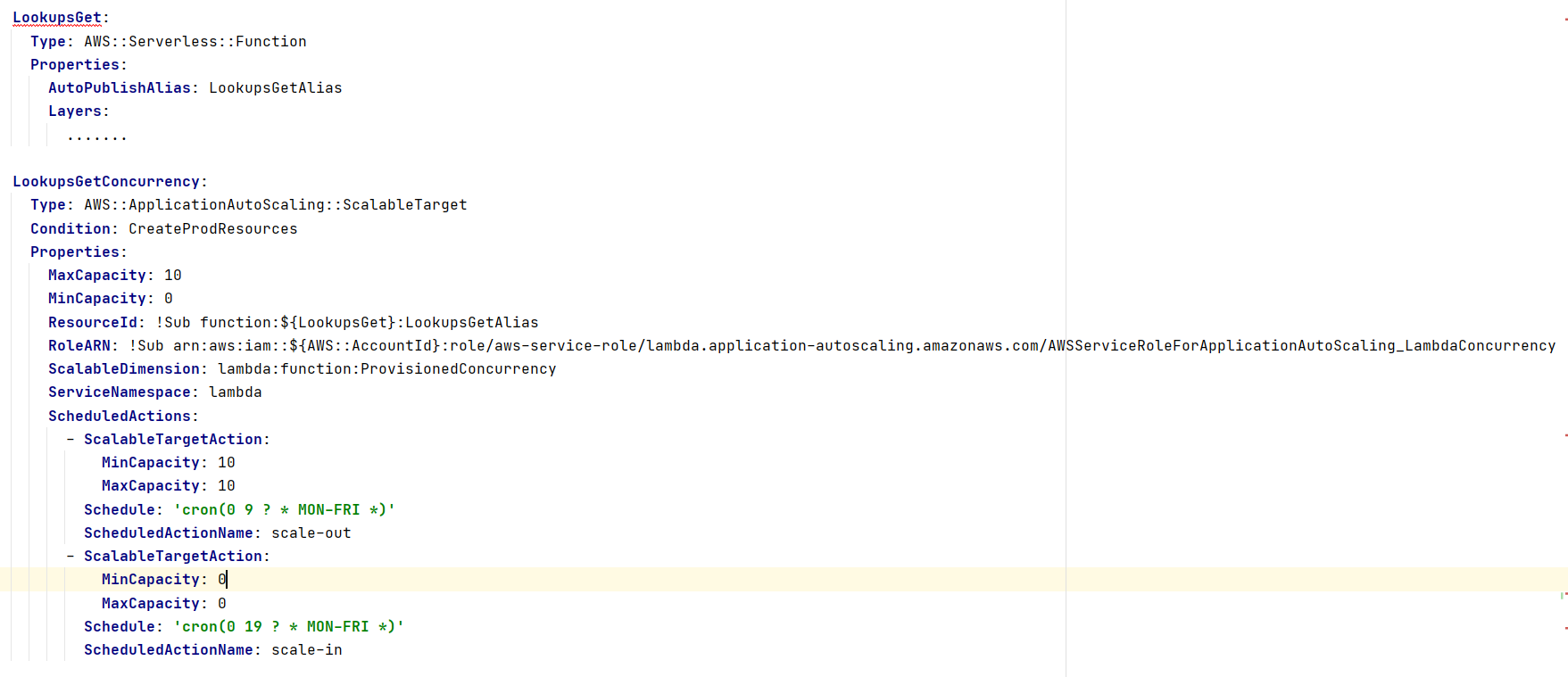

We are using AWS SAM to schedule AWS Lambda Provisioned Concurrency and to deploy our application. The following code shows how to schedule Provisioned Concurrency for the production environment in an AWS SAM template:

In this template:

- You need an alias for the Lambda function. This automatically creates the alias “LookupsGetAlias” and sets it to the latest version of the Lambda function.

- This creates an AWS::ApplicationAutoScaling::ScalableTarget resource to register the Lambda function as a scalable target.

- This references the correct version of the Lambda function by using the “LookupsGetAlias” alias.

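- This defines the different actions to schedule as a property of the scalable target.

- You cannot define the scalable target until the alias of the function is published, so the scalable target has to depend on the alias resource that SAM generates. Its logical ID follows the pattern <FunctionResource>Alias<AliasName>.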
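The same schedule can also be set up through the Application Auto Scaling API, which is what the scalable target and scheduled actions described above translate to. The following Python sketch builds the request parameters for `register_scalable_target` and `put_scheduled_action`. The helper names, action names, and capacities are illustrative, the 10:00 and 20:00 cron expressions mirror the peak hours mentioned earlier, and the schedule runs in UTC unless a time zone is supplied.

```python
SERVICE_NAMESPACE = "lambda"
SCALABLE_DIMENSION = "lambda:function:ProvisionedConcurrency"


def resource_id(function_name, alias):
    """Application Auto Scaling identifies the target as function:<name>:<alias>."""
    return f"function:{function_name}:{alias}"


def scalable_target_request(function_name, alias, min_capacity, max_capacity):
    """Parameters for register_scalable_target."""
    return {
        "ServiceNamespace": SERVICE_NAMESPACE,
        "ResourceId": resource_id(function_name, alias),
        "ScalableDimension": SCALABLE_DIMENSION,
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }


def scheduled_action_request(function_name, alias, name, cron, capacity, tz=None):
    """Parameters for put_scheduled_action that pin capacity at a given time."""
    request = {
        "ServiceNamespace": SERVICE_NAMESPACE,
        "ScheduledActionName": name,
        "ResourceId": resource_id(function_name, alias),
        "ScalableDimension": SCALABLE_DIMENSION,
        "Schedule": f"cron({cron})",
        # Setting min and max to the same value fixes the provisioned amount.
        "ScalableTargetAction": {"MinCapacity": capacity, "MaxCapacity": capacity},
    }
    if tz is not None:
        request["Timezone"] = tz  # IANA name; omitted means UTC
    return request


def daytime_schedule(function_name, alias, peak_capacity, tz=None):
    """Scale out at 10:00 and back to zero at 20:00, every day."""
    return [
        scheduled_action_request(function_name, alias, "scale-out-morning",
                                 "0 10 * * ? *", peak_capacity, tz),
        scheduled_action_request(function_name, alias, "scale-in-evening",
                                 "0 20 * * ? *", 0, tz),
    ]
```

With `client = boto3.client("application-autoscaling")`, the target is registered once via `client.register_scalable_target(**scalable_target_request(...))`, and each entry from `daytime_schedule(...)` is passed to `client.put_scheduled_action(**action)`.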

Conclusion

Serverless services such as AWS Lambda have downsides that affect the end-user experience. Cold starts, in particular, impact the performance of your serverless application.

Provisioned Concurrency for AWS Lambda helps you take greater control over performance and reduces the latency caused by creating execution environments. In combination with Application Auto Scaling, you can easily schedule scaling around your application’s usage peaks and optimize cost.

Enjoy your day and keep safe!