Common Challenges in Manual Software Testing and How to Overcome Them


Manual software testing remains a cornerstone of the quality assurance process, offering the human insight and adaptability crucial to delivering reliable software. It involves examining software hands-on, without automation tools, to confirm that it meets user requirements. Testers independently design and execute test scenarios, continually validating the software’s functionality against predefined criteria.

While manual testing has been a standard practice for some time, it struggles to keep up with the demands of Agile and Continuous Delivery methodologies. With frequent changes and updates, manually testing every aspect of the software becomes increasingly challenging. In this article, we’ll explore some of the most common challenges encountered by manual testers as well as the most effective strategies to overcome them.

Challenge: Time Limitations

Balancing project deadlines against comprehensive testing is a significant challenge in manual testing. Testers frequently face time pressure that can lead to insufficient test coverage or rushed testing, potentially compromising the product’s quality. Striking the right balance between on-time delivery and in-depth testing requires effective time management and prioritization.

Solution:

Prioritize Testing Scenarios

First, prioritizing test scenarios is crucial in manual testing, especially when time is tight. By identifying and focusing on the scenarios most vital to the application’s functionality and those carrying the greatest potential risk, testers ensure that essential areas get the coverage they need within the time available. This approach makes more efficient use of resources and maximizes coverage of what matters most, even under time constraints.

Risk-Based Testing Methodologies

Risk-based testing is another smart move when time for manual testing is limited. By ranking tests according to the likelihood of failure and the potential impact on the application and business goals, testers can manage time constraints while still covering high-risk areas. This approach concentrates effort where the risk is greatest, so the most serious potential issues are addressed within the available time.
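A common way to put this into practice is to score each scenario as likelihood of failure multiplied by business impact, then test in descending score order until the time runs out. The TypeScript sketch below assumes a 1-to-5 scale for both factors and a per-scenario time estimate; the `TestScenario` fields, the greedy budget rule, and the sample scenarios are illustrative assumptions rather than a prescribed method.

```typescript
// Hypothetical record for one manual test scenario in a risk register.
interface TestScenario {
  name: string;
  likelihood: number; // chance of failure, 1 (low) to 5 (high)
  impact: number; // business impact of a failure, 1 (cosmetic) to 5 (critical)
  estimatedMinutes: number; // time needed to execute the scenario manually
}

// Risk score as likelihood multiplied by impact, a common risk-based testing heuristic.
function riskScore(s: TestScenario): number {
  return s.likelihood * s.impact;
}

// Orders scenarios from highest to lowest risk and greedily keeps
// every scenario that still fits into the remaining time budget.
function planWithinBudget(scenarios: TestScenario[], budgetMinutes: number): TestScenario[] {
  const ordered = [...scenarios].sort((a, b) => riskScore(b) - riskScore(a));
  const plan: TestScenario[] = [];
  let used = 0;
  for (const s of ordered) {
    if (used + s.estimatedMinutes <= budgetMinutes) {
      plan.push(s);
      used += s.estimatedMinutes;
    }
  }
  return plan;
}

const plan = planWithinBudget(
  [
    { name: 'Checkout with saved card', likelihood: 4, impact: 5, estimatedMinutes: 30 },
    { name: 'Profile avatar upload', likelihood: 3, impact: 2, estimatedMinutes: 20 },
    { name: 'Password reset email', likelihood: 2, impact: 4, estimatedMinutes: 15 },
  ],
  45,
);
console.log(plan.map((s) => s.name)); // [ 'Checkout with saved card', 'Password reset email' ]
```

The greedy rule keeps things simple: the riskiest scenarios are always scheduled first, and anything left out of the plan is an explicit, reviewable decision rather than something skipped by accident.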

Planning and Scheduling

Clear, well-defined test plans and schedules are essential for working within tight time limits in manual testing. By outlining testing objectives, scope, and timelines, testers establish a roadmap for their testing activities, ensuring that efforts are coordinated and efficient. Setting specific milestones and regularly reviewing the test plan allows for ongoing progress monitoring and adjustments to make the most of the given time frame.

Optimization

Optimizing test execution is vital to maximizing testing coverage and efficiency within time constraints in manual testing. Strategies such as the following can help streamline the execution process and maximize the available time:

- grouping related tests

- minimizing setup and teardown time

- using parallel testing

These tactics speed up testing, eliminate unnecessary steps, and keep testing efforts focused on the desired outcomes within the time limits.

Challenge: Test Case Management

Managing a large volume of test cases manually can be overwhelming and time-consuming. Organizing, updating, and tracking these cases is difficult, which leads to inconsistencies and inefficiencies across the testing process. The problem is especially noticeable during regression testing, where it can become unclear which test cases still reflect the current requirements, causing confusion and execution delays.

Solution:

Adopting a simplified approach to writing test cases is crucial for maintaining agility and efficiency in dynamic projects characterized by constant changes and tight deadlines. Utilizing test outlines with a “Given-When-Then” syntax can streamline the process, providing a clear structure that allows quick adaptation to change without requiring extensive rewriting of traditional test cases. Also, using standardized test case templates boosts this method by offering ready-made frameworks for typical scenarios or functions. This helps testers easily access structured test cases and ensures broader coverage of required changes, making testing smoother in dynamic projects.
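As a rough illustration, a standardized Given-When-Then outline can be captured as a small template and rendered into steps a tester can follow. The `TestOutline` type and `renderOutline` function below are hypothetical, not a standard format; they simply show how short, structured steps keep an outline easy to edit when a requirement changes.

```typescript
// Hypothetical template for a lightweight Given-When-Then test outline.
interface TestOutline {
  title: string;
  given: string[]; // preconditions
  when: string[]; // actions the tester performs
  then: string[]; // expected results to verify
}

// Renders an outline as plain-text steps; extra steps in a section start with "And".
function renderOutline(o: TestOutline): string {
  const section = (keyword: string, steps: string[]): string[] =>
    steps.map((step, i) => `${i === 0 ? keyword : 'And'} ${step}`);
  return [
    `Scenario: ${o.title}`,
    ...section('Given', o.given),
    ...section('When', o.when),
    ...section('Then', o.then),
  ].join('\n');
}

const loginOutline: TestOutline = {
  title: 'Registered user logs in',
  given: ['the user is on the login page', 'the account "demo" exists'],
  when: ['the user submits valid credentials'],
  then: ['the dashboard is displayed'],
};

console.log(renderOutline(loginOutline));
// Scenario: Registered user logs in
// Given the user is on the login page
// And the account "demo" exists
// When the user submits valid credentials
// Then the dashboard is displayed
```

When a requirement changes, usually only one or two step strings need editing, rather than a full rewrite of a traditional step-by-step test case.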

Furthermore, task breakdown should include dedicated time for reviewing and updating existing test cases. When requirements change late in a sprint, testers may need to focus on running as many tests as possible within the time available.

When requirements change at the last minute, it’s important to let everyone know that there may not be enough time to test everything thoroughly before the deadline. By being upfront about this, QA engineers make sure everyone understands the situation at the end of the sprint. This helps developers and stakeholders know what to expect and set realistic timelines for testing. Clear communication helps everyone work together to handle these changes effectively.

Challenge: Repetitive Tasks

Manual testers often have to execute the same test cases repeatedly across iterations or releases of a software product. This constant repetition leads to monotony and, over time, lower productivity. Moreover, executing identical test cases again and again increases the likelihood of overlooking defects in the system. As testers’ enthusiasm and engagement decline, the overall process suffers and bugs are more likely to slip into the software.

Solution:

To address the repetitive tasks that come with manual testing, one of the best strategies is to automate the execution of test scenarios that repeat frequently and cover the essential functions of the software. This means identifying the test cases that remain stable, repeat often, and don’t change much across different versions or updates of the software. By pinpointing these consistent and repetitive test scenarios, teams can leverage automation tools to streamline their testing processes. Automating these tests reduces the need for manual effort and ensures that tests are carried out consistently and reliably every time.

Moreover, automating repetitive tasks allows manual testers to focus their time and energy on more challenging and exploratory testing activities. This shift in focus not only improves the productivity of manual testers but also enhances the overall efficiency and effectiveness of the testing process. By reallocating resources from repetitive tasks to more complex testing endeavors, teams can uncover potential issues and areas for improvement that may have otherwise gone unnoticed.

Overall, by embracing automation for repetitive tasks, manual testing teams can unlock new levels of efficiency, productivity, and quality in their testing efforts.
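Finding the stable, frequently repeated test cases described above can be partly data-driven. The sketch below assumes you can export, per test case, how often it was executed and how often its steps were edited over some period; the `TestCaseHistory` shape and both thresholds are hypothetical and should be tuned to your project.

```typescript
// Hypothetical record exported from a test management tool.
interface TestCaseHistory {
  id: string;
  executions: number; // how many times the case was run in the period
  revisions: number; // how many times its steps were edited in the same period
}

// Selects cases that run often and whose steps rarely change,
// ordered by how frequently they are executed.
function automationCandidates(
  history: TestCaseHistory[],
  minExecutions = 10,
  maxRevisionRate = 0.1, // at most one edit per ten executions
): string[] {
  return history
    .filter((t) => t.executions >= minExecutions)
    .filter((t) => t.revisions / t.executions <= maxRevisionRate)
    .sort((a, b) => b.executions - a.executions)
    .map((t) => t.id);
}

const candidates = automationCandidates([
  { id: 'TC-101 login', executions: 40, revisions: 1 },
  { id: 'TC-205 checkout', executions: 25, revisions: 8 },
  { id: 'TC-310 export report', executions: 4, revisions: 0 },
  { id: 'TC-412 search', executions: 30, revisions: 2 },
]);
console.log(candidates); // [ 'TC-101 login', 'TC-412 search' ]
```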

Challenge: Communication and Collaboration

Effective communication and teamwork are vital in manual testing. Testers act as the bridge linking developers, business analysts, and all other project participants, which is why staying on the same page is crucial. In practice, however, things aren’t always smooth. In methodologies that embrace frequent change, important details can easily slip through the cracks, and when everyone is focused on different tasks, misunderstandings and misinformation can quickly arise.

Solution:

Collaboration between developers and QAs is crucial to enhancing testing effectiveness. By sharing knowledge with testers from the outset of development, developers empower them to make informed decisions about test prioritization, ensuring software quality and functionality. This collaboration streamlines the software development process, making it more effective and time efficient. A strategic approach involves:

- Scheduling additional meetings whenever new changes occur or additional questions arise from the requirements.

- Updating requirements in User Stories after each sync centralizes information and ensures everyone is on the same page.

Establishing new communication channels improves transparency, keeping all stakeholders informed and aligned with project developments. Empowering testers to make informed decisions benefits developers and supports comprehensive testing, helping software components become ready for deployment after each sprint. This approach eases the workload for QA testers and helps ensure that software products meet business requirements and function as intended.

Challenge: Technical Complexity

Beyond functional testing, manual testers face many technical challenges every day. They need to ensure that the software works well at different levels and check how it performs under different conditions, such as:

- Heavy use, when a large number of people access the application at the same time (performance testing).

- Keeping the software safe from hackers (security testing).

- Ensuring the software works on various devices and systems (compatibility testing).

Solution:

Exploring the world of testing tools can be daunting, but it’s okay to take it one step at a time. Testers shouldn’t feel pressured to become experts overnight in every field of software testing. Mastery comes with practice and experience.

Start by familiarizing yourself with the basics of the tools you’ll be using. Reach out to colleagues who have more knowledge in the fields you are interested in so they can give you guidance and tips. They’ve been where you are and can offer valuable insights. It’s also beneficial to prioritize your learning objectives. Decide what areas of testing you want to focus on first and gradually expand your knowledge from there.

Once you feel comfortable with the knowledge level you have gained, try to incorporate it into your everyday work. Also, collaborating with your QA peers by splitting tasks between different testing types can provide better exposure to various aspects of testing, allowing you to build your skillset and gain a deeper understanding of the field.

Conclusion

Manual testing plays a vital role in ensuring the quality and reliability of software products, yet it comes with its own challenges. The ones covered here merely scratch the surface; testers encounter many more in their day-to-day work. By recognizing and addressing these common challenges, testers can enhance the effectiveness and efficiency of their testing efforts. It’s essential for testers to stay updated on the latest trends and approaches in the testing world to tackle common problems with fresh solutions. Don’t be afraid to try new things and step out of your comfort zone.

Author

Boris Kochov

Quality Assurance Engineer

Intel’s Stand-Alone FPGA Company’s Name Revived as Intel Altera

Intel's FPGA Business Revives as Altera: A Strategic Spin-Off Unveiled

In a pivotal move for the semiconductor industry, Intel has breathed new life into its FPGA business by rebranding it as Altera. This development comes just two months after Intel spun off its Programmable Solutions Group into a stand-alone company, marking a significant shift in its strategic direction. With the Altera rebranding, Intel signals a renewed focus on the FPGA market and its potential for growth and innovation.

A Return to Roots: The Altera Rebranding

The decision to revert to the original Altera name underscores Intel's commitment to revitalizing its FPGA business and positioning it for future growth. With plans underway to attract private investment, Intel aims to pave the way for Altera's potential return to the public market by 2026. Embracing its heritage, Altera aims to build upon its legacy of innovation while forging new pathways in the FPGA landscape.

Leadership for a New Era: Meet the Team

Leading the charge at Altera are seasoned Intel veterans Sandra Rivera, appointed as CEO, and Shannon Poulin, serving as COO. Their extensive experience within Intel augurs well for Altera's prospects as it embarks on this new chapter as an independent entity. With their leadership, Altera is poised to navigate the complexities of the semiconductor industry while driving forward with bold and strategic initiatives.

Strategic Imperatives: Driving Growth and Expansion

The spin-off of Altera serves a dual purpose for Intel. Not only does it provide the company with additional liquidity to fuel CEO Pat Gelsinger's ambitious comeback plan, but it also unlocks new avenues for business expansion. By targeting markets such as industrial, automotive, and aerospace and defense, Altera aims to diversify its revenue streams and capitalize on previously untapped opportunities. Through strategic partnerships and innovative solutions, Altera seeks to position itself as a key player in emerging markets and industries.

Product Focus: Innovating for Tomorrow's Needs

Crucially, the move positions Altera to focus on developing low-end and midrange FPGA products, addressing concerns around affordability and accessibility. This strategic shift is poised to democratize the use of FPGAs, empowering more companies to leverage their capabilities in system development. Altera's product lineup, including the Agilex 9 FPGA boasting industry-leading data converters, underscores its commitment to innovation and meeting the evolving needs of customers. With a relentless focus on R&D, Altera is poised to deliver cutting-edge solutions that push the boundaries of what is possible in the FPGA market.

Expert Analysis: Assessing the Potential

Expert analysis underscores the potential benefits of Intel's strategic spin-off of Altera. By unlocking new growth opportunities and leveraging experienced leadership, the move sets the stage for both entities to thrive independently. However, success will hinge on factors such as market response and the effective execution of planned initiatives. As Altera charts its course as an independent entity, it must remain agile and adaptable in the face of evolving market dynamics and technological advancements.

Looking Ahead: Towards a New Era of Innovation

Looking ahead, the separation of Altera from Intel could pave the way for increased private investment and potentially influence similar strategic moves within the semiconductor industry. With Altera now operating on its own, the stage is set for a new era of innovation and growth in the FPGA market. With a clear vision and strategic roadmap in place, Altera is poised to capitalize on emerging opportunities and cement its position as a leader in the field of programmable solutions.

What is GitHub Copilot in the Test Automation World?


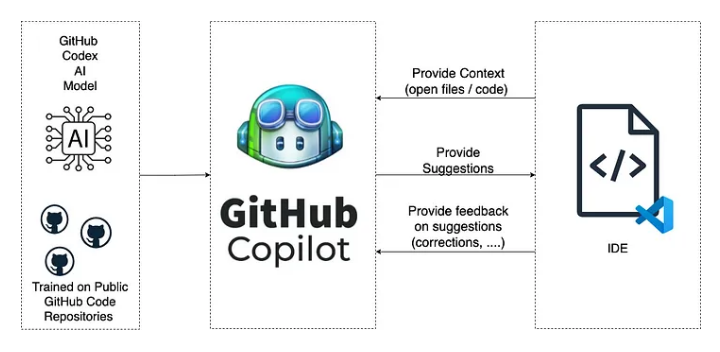

Test automation, a foundational element of contemporary software development, is critical for ensuring that software is reliable, works as intended, and satisfies its users. As software grows more complex and the pressure for faster development cycles mounts, the search for inventive ways to streamline test automation is more urgent than ever. This is where GitHub Copilot, born from a partnership between GitHub and OpenAI, comes into play, aiming to transform the way developers write, test, and maintain their code.

More than just another addition to the developer’s toolkit, GitHub Copilot is an AI-driven companion that adds a whole new layer to the coding and testing process. With its ability to interpret natural language and grasp the context of code, Copilot provides instant suggestions for completing code lines or even whole functions. This capability significantly cuts down on the time and effort needed to create solid test scripts and improve code quality.

This article explores in depth how GitHub Copilot and test automation work together. We’ll examine how this AI-enhanced tool is pushing the limits of automated testing by facilitating the generation of detailed test cases, refining existing test scripts, and guiding users toward best practices. GitHub Copilot emerges as a beacon of innovation, promising to speed up the development timeline while maintaining high standards of quality and reliability in software products.

Through our journey into the capabilities and applications of GitHub Copilot in test automation, we’ll discover its remarkable potential to transform the field. By the end, the impact of GitHub Copilot on boosting test coverage, accelerating development cycles, and promoting an ethos of continuous learning and improvement should be clear. Let’s embark on this exploration together to see how GitHub Copilot is not merely influencing the future of test automation but reshaping the very landscape of software development.

What’s this about?

GitHub Copilot, in the context of test automation, refers to using GitHub's AI-powered tool to help write, generate, and optimize test code and scripts for software testing. GitHub Copilot is an AI pair programmer that suggests code and entire functions based on the context of the code you are working on, making it a powerful tool for accelerating development and testing. When applied to test automation, it offers several benefits:

Code Generation for Test Scripts – GitHub Copilot can generate test cases or scripts based on your descriptions. This includes unit tests, integration tests, and end-to-end tests, helping to cover various aspects of application functionality.

Improving Test Coverage – By suggesting tests that you might not have considered, Copilot can help improve the coverage of your test suite, potentially catching bugs and issues that would have otherwise been missed.

Accelerating Test Development – Copilot can significantly reduce the time required to write test scripts by suggesting code snippets and complete functions. This can be particularly useful in Agile and DevOps environments where speed and efficiency are critical.

Learning Best Practices – For QA engineers, especially those new to automation or to specific languages and frameworks, Copilot can serve as a learning tool, suggesting best practices and demonstrating how to implement various testing patterns.

Refactoring and Optimization – Copilot can suggest improvements to existing test code, helping to refactor and optimize test scripts for better performance and maintainability.

However, while GitHub Copilot can be a valuable tool in test automation, it’s important to remember that it’s an aid, not a replacement for human oversight. The code and tests it generates should be reviewed for relevance, correctness, and efficiency. Copilot’s suggestions come from a model trained on a vast amount of public code, so ensuring that the generated code complies with your project’s specific requirements and standards is crucial.

To effectively use GitHub Copilot in automation testing, you should:

- Clearly articulate what you’re testing through descriptive function names, comments, or both.

- Review the suggested code carefully to ensure it meets your testing standards and correctly covers the test scenarios you aim for.

- Be open to refining and editing the suggested code, as Copilot’s suggestions might not always be perfect or fully optimized for your specific use case.

Despite its capabilities, GitHub Copilot is a tool to augment the developer’s workflow, not replace it. It is crucial to understand the logic behind the suggested code and ensure it aligns with your testing requirements and best practices.

GitHub Copilot and Playwright

GitHub Copilot’s integration with test automation, particularly with frameworks like Playwright, exemplifies how AI can streamline the development of robust, end-to-end automated tests. Playwright is an open-source framework by Microsoft for testing web applications across different browsers. It provides capabilities for browser automation, allowing tests to mimic user interactions with web applications seamlessly. When combined with GitHub Copilot, developers and QA engineers can leverage the following benefits:

Code Generation for Playwright Tests

Automated Suggestions: Copilot can suggest complete scenarios based on your descriptions or partial code when you start writing a Playwright test. For example, if you’re testing a login feature, Copilot might suggest code for navigating to the login page, entering credentials, and verifying the login success.

Custom Functionality: Copilot can assist in writing custom helper functions or utilities specific to your application’s testing needs, such as data setup or teardown, making your Playwright tests more efficient and maintainable.

Enhancing Test Coverage

Scenario Exploration: Copilot can suggest test scenarios you might not have considered, thereby improving the breadth and depth of your test coverage. You ensure a more robust application by covering more edge cases and user paths.

Dynamic Test Data: It can help generate code for handling dynamic test data, which is crucial for making your tests more flexible and less prone to breakage due to data changes.
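As an illustration of the kind of dynamic-data helper Copilot might propose, the sketch below generates a unique user for each test run, so repeated or parallel runs do not collide on values that must be unique, such as an email address. The `buildTestUser` name, its fields, and the reserved `example.test` domain are assumptions for this example.

```typescript
// Hypothetical shape of the user data a sign-up test needs.
interface TestUser {
  username: string;
  email: string;
  password: string;
}

// Builds a user whose values carry a unique suffix; `overrides` lets a test
// pin specific fields (for example, a deliberately wrong password).
function buildTestUser(prefix = 'qa', overrides: Partial<TestUser> = {}): TestUser {
  // Timestamp plus a random fragment keeps values unique across runs and parallel workers.
  const suffix = `${Date.now().toString(36)}${Math.random().toString(36).slice(2, 8)}`;
  return {
    username: `${prefix}_${suffix}`,
    email: `${prefix}+${suffix}@example.test`,
    password: `Pw-${suffix}!`,
    ...overrides,
  };
}

const user = buildTestUser();
const lockedUser = buildTestUser('locked', { password: 'WrongPassword1!' });
console.log(user.email, lockedUser.password);
```

Because nothing is hard-coded, a test that registers `user` can be rerun immediately without first deleting the account from the previous run.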

Speeding Up Test Development

Rapid Prototyping: With Copilot, you can quickly prototype tests for new features, getting instant feedback on your test logic and syntax, accelerating the test development cycle.

Learning Playwright Features: For those new to Playwright, Copilot serves as an on-the-fly guide, suggesting usage patterns and demonstrating API capabilities, thus flattening the learning curve.

Best Practices and Refactoring

Adherence to Best Practices: Copilot can suggest best practices for writing Playwright tests, such as using page objects or implementing proper wait strategies, helping you write more reliable tests.

Code Optimization: It can offer suggestions to refactor and optimize existing tests, improving their readability and performance.

Continuous Learning and Adaptation

Because Copilot draws its context from the code and comments in your open files, its suggestions tend to follow your project’s existing style and conventions, becoming more tailored and valuable as your codebase takes shape.

Usage Example

Imagine writing a Playwright test to verify that a user can successfully search for a product in an e-commerce application. You might start by describing the test in a comment or writing the initial setup code. Copilot could then suggest the complete code for navigating to the search page, entering a search query, executing the search, and asserting that the expected product results appear.
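A sketch of what such a suggestion could look like is shown below; it is illustrative, not actual Copilot output. To run without a browser, it targets a minimal interface mirroring the Playwright methods it uses (`page.goto()`, `page.locator()`, and the locator methods `fill()`, `press()`, and `allTextContents()`), which a real Playwright `Page` satisfies. The URL, selectors, and function names are hypothetical.

```typescript
// Minimal subset of Playwright's Page and Locator APIs used in this sketch.
interface LocatorLike {
  fill(value: string): Promise<void>;
  press(key: string): Promise<void>;
  allTextContents(): Promise<string[]>;
}

interface PageLike {
  goto(url: string): Promise<unknown>;
  locator(selector: string): LocatorLike;
}

// Hypothetical selectors for the shop's search box and result titles.
const SEARCH_INPUT = 'input[name="q"]';
const RESULT_TITLE = '.product-title';

// Opens the shop, submits a search, and returns the product titles shown.
async function searchForProduct(page: PageLike, baseUrl: string, query: string): Promise<string[]> {
  await page.goto(baseUrl);
  const searchBox = page.locator(SEARCH_INPUT);
  await searchBox.fill(query);
  await searchBox.press('Enter');
  return page.locator(RESULT_TITLE).allTextContents();
}

// Fails unless at least one result title contains the query (case-insensitive).
function assertResultsMatch(titles: string[], query: string): void {
  const needle = query.toLowerCase();
  if (!titles.some((title) => title.toLowerCase().includes(needle))) {
    throw new Error(`No product title contains "${query}"`);
  }
}

// In a Playwright spec, this could be used as:
// test('user can search for a product', async ({ page }) => {
//   const titles = await searchForProduct(page, 'https://shop.example.com', 'wireless mouse');
//   assertResultsMatch(titles, 'wireless mouse');
// });
```

This is also a good example of a suggestion worth refining during review: `allTextContents()` reads the texts once and does not wait for results to render, and Playwright's documentation suggests preferring auto-retrying assertions such as `expect(locator).toHaveText()` when asserting text, to avoid flaky tests.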

Limitations and Considerations

While GitHub Copilot can significantly enhance the process of writing Playwright tests, it’s essential to:

Review and Test the Suggestions: Ensure the suggested code accurately meets your test requirements and behaves as expected.

Understand the Code: Rely on Copilot for suggestions but understand the logic behind the code to maintain and debug tests effectively.

In summary, GitHub Copilot can be a powerful ally in automating web testing with Playwright, offering speed and efficiency improvements while also helping to ensure comprehensive test coverage and adherence to best practices.

What data does GitHub Copilot collect?

GitHub Copilot, developed by GitHub in collaboration with OpenAI, functions by leveraging a vast corpus of public code to provide coding suggestions in real-time. Privacy and data handling concerns are paramount, especially when integrating such a tool into development workflows. Here is a general overview of the types of data GitHub Copilot may collect and use:

User Input – Copilot collects the code you are working on in your editor to provide relevant suggestions. This input is necessary for the AI to understand the context of your programming task and generate appropriate code completions or solutions.

Code Suggestions – The tool also records the suggestions it offers and whether they are accepted, ignored, or modified by the user. This data helps refine and improve the quality and relevance of future suggestions.

Telemetry Data – Like many integrated development tools, GitHub Copilot may collect telemetry data about how the software is used. This can include metrics on usage patterns, performance statistics, and information about the software environment (e.g., editor version, programming language). This data helps GitHub understand how Copilot is used and identify areas for improvement.

Feedback – Users have the option to provide feedback directly within the tool. This feedback, which may include code snippets and the user’s comments, is valuable for improving Copilot’s accuracy and functionality.

Privacy and Security Measures

GitHub has implemented several privacy and security measures to protect user data and ensure compliance with data protection regulations:

Data Anonymization: To protect privacy, GitHub attempts to anonymize the data collected, removing any personally identifiable information (PII) from the code snippets or user feedback before processing or storage.

Data Usage: The data collected is primarily used to improve Copilot’s algorithms and performance, ensuring that the tool becomes more effective and relevant to the user’s needs over time.

Compliance with GDPR and Other Regulations: GitHub adheres to the General Data Protection Regulation (GDPR) and other relevant privacy laws and regulations, providing users with rights over their data, including the right to access, rectify, and erase their data.

User Control and Transparency

Opt-out Options: Users concerned about privacy can limit data collection. For instance, telemetry data collection can often be disabled through settings in the IDE or editor extension.

Transparency: GitHub provides documentation and resources to help users understand what data is collected, how it is used, and how to exercise their privacy rights.

Users should review GitHub Copilot’s privacy policy and terms of use for the most current and detailed information regarding data collection, usage, and protection measures. As with any tool processing code or personal data, staying informed and adjusting settings to match individual and organizational privacy preferences is crucial.

Incorporate GitHub Copilot into the test automation project

Incorporating GitHub Copilot into an automation project involves leveraging its capabilities to speed up the writing of automation scripts, enhance test coverage, and potentially introduce efficiency and innovation into your testing suite. Here’s a step-by-step approach to integrating GitHub Copilot into your automation workflow:

1. Install GitHub Copilot

First, make sure GitHub Copilot is installed and set up in your code editor. Copilot is available for several editors, including Visual Studio Code, one of the most popular editors for development and automation tasks.

Visual Studio Code Extension: Install the GitHub Copilot extension from the Visual Studio Code Marketplace.

Configuration: After installation, authenticate with your GitHub account to activate Copilot.

2. Start with Small Automation Tasks

Begin by using GitHub Copilot on smaller, less critical parts of your automation project. This lets you learn how Copilot generates code, and where its capabilities and limitations lie, without risking significant project components.

Script Generation: Use Copilot to generate simple scripts or functions, such as data setup, cleanup tasks, or basic test cases.

Code Snippets: Leverage Copilot for code snippets that perform everyday automation tasks, such as logging in to a web application or navigating through menus.
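For the data setup and cleanup tasks mentioned above, a typical first request to Copilot is a small helper that records what a test created and removes it afterwards, newest first. The `CleanupRegistry` class below is a hypothetical, framework-agnostic sketch; in Playwright you would typically call it from a fixture or a `test.afterEach` hook.

```typescript
// Records cleanup actions while a test runs and executes them afterwards in
// reverse order, so data is removed before the data it depends on.
class CleanupRegistry {
  private readonly actions: Array<() => Promise<void>> = [];

  register(action: () => Promise<void>): void {
    this.actions.push(action);
  }

  // Runs every pending action, even if some fail, then reports the failures.
  async runAll(): Promise<void> {
    const pending = this.actions.splice(0).reverse();
    const errors: unknown[] = [];
    for (const action of pending) {
      try {
        await action();
      } catch (err) {
        errors.push(err);
      }
    }
    if (errors.length > 0) {
      throw new Error(`${errors.length} cleanup action(s) failed`);
    }
  }
}

// Usage with hypothetical setup steps; a real suite would call your API here.
async function demo(): Promise<string[]> {
  const log: string[] = [];
  const cleanup = new CleanupRegistry();
  log.push('create user');
  cleanup.register(async () => {
    log.push('delete user');
  });
  log.push('create order');
  cleanup.register(async () => {
    log.push('delete order');
  });
  await cleanup.runAll();
  return log;
}

demo().then((log) => console.log(log));
// [ 'create user', 'create order', 'delete order', 'delete user' ]
```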

3. Expand to More Complex Automation Scenarios

As you become more comfortable with Copilot’s suggestions and capabilities, use it for more complex automation scenarios.

Test Case Generation: Describe complex test scenarios in comments and let Copilot suggest entire test cases. This can include positive, negative, and edge-case scenarios.

Framework-Specific Suggestions: Utilize Copilot’s understanding of various testing frameworks (e.g., Selenium, Playwright, Jest) to generate framework-specific code, ensuring your tests are not only functional but also adhere to best practices.
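For example, a comment that lists positive, negative, and edge-case scenarios gives Copilot enough context to propose a table-driven test like the one below. The `applyDiscount` function and its rules are hypothetical, and the checks use plain TypeScript to stay framework-neutral; in Jest or Playwright Test the same table would use the runner's own `test` and `expect`.

```typescript
// Hypothetical function under test: applies a percentage discount
// and rounds the result to whole cents.
function applyDiscount(price: number, percent: number): number {
  if (percent < 0 || percent > 100) {
    throw new RangeError('percent must be between 0 and 100');
  }
  return Math.round(price * (100 - percent)) / 100;
}

// Scenarios for applyDiscount:
// - positive: a typical discount reduces the price
// - edge: 0% leaves the price unchanged, 100% makes the item free
// - negative: percentages outside 0..100 are rejected
type Expected = number | 'error';
const cases: Array<{ name: string; price: number; percent: number; expected: Expected }> = [
  { name: 'positive: 20% off 50.00', price: 50, percent: 20, expected: 40 },
  { name: 'edge: 0% off', price: 19.99, percent: 0, expected: 19.99 },
  { name: 'edge: 100% off', price: 19.99, percent: 100, expected: 0 },
  { name: 'negative: 150% is rejected', price: 10, percent: 150, expected: 'error' },
  { name: 'negative: -5% is rejected', price: 10, percent: -5, expected: 'error' },
];

// Runs every case and returns the names of the ones that failed.
function runCases(): string[] {
  const failures: string[] = [];
  for (const c of cases) {
    let actual: Expected;
    try {
      actual = applyDiscount(c.price, c.percent);
    } catch {
      actual = 'error';
    }
    if (actual !== c.expected) failures.push(c.name);
  }
  return failures;
}

console.log(runCases()); // []
```

Keeping the scenarios in a table makes gaps easy to spot during review: a missing negative or boundary row is visible at a glance.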

4. Optimize Existing Automation Code

Copilot can assist in refactoring and optimizing your existing automation codebase.

Code Refactoring: Use Copilot to suggest improvements to your existing automation scripts, making them more efficient or readable.

Identify and Fill Test Gaps: Copilot can help identify areas where your test coverage might be lacking by suggesting additional tests based on the existing code and project structure.

5. Continuous Learning and Improvement

GitHub Copilot is built on machine-learning models that continue to evolve. Using Copilot regularly for different tasks helps you stay up to date with its capabilities.

Feedback Loop: Provide feedback on the suggestions made by Copilot. This helps improve Copilot and lets you learn new coding patterns and approaches.

6. Review and Quality Assurance

While GitHub Copilot can significantly speed up the development of automation scripts, it’s crucial to maintain a rigorous review process.

Code Review: Ensure that all code generated by Copilot is reviewed by a human for logic, efficiency, and adherence to project standards before being integrated into the project.

Testing: Subject Copilot-generated code to the same level of testing and quality assurance as any other code in the project.

7. Collaboration and Sharing Best Practices

Share your experiences and best practices for using GitHub Copilot in automation projects with your team. Collaboration can lead to discovering new ways to utilize Copilot effectively.

Documentation: Document how Copilot has been used in the project, including any configurations, customizations, or specific practices that have proven effective.

Knowledge Sharing: Hold sessions to share insights and tips on leveraging Copilot for automation tasks, fostering a culture of innovation and continuous improvement.

Incorporating GitHub Copilot into your automation project can lead to significant productivity gains, enhanced test coverage, and faster development cycles. However, it's crucial to view Copilot as a tool to augment human expertise, not replace it. Properly integrated, GitHub Copilot can be a powerful ally in building and maintaining high-quality automation projects.

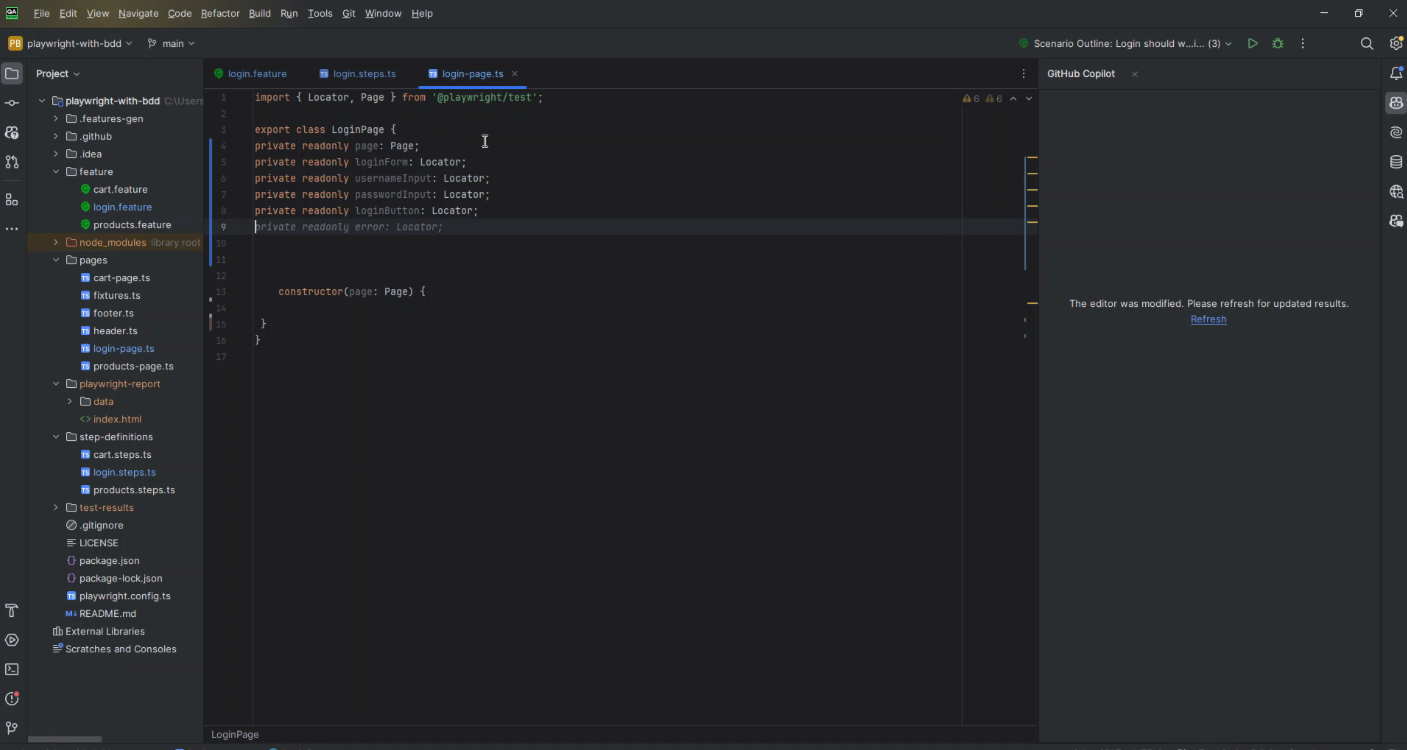

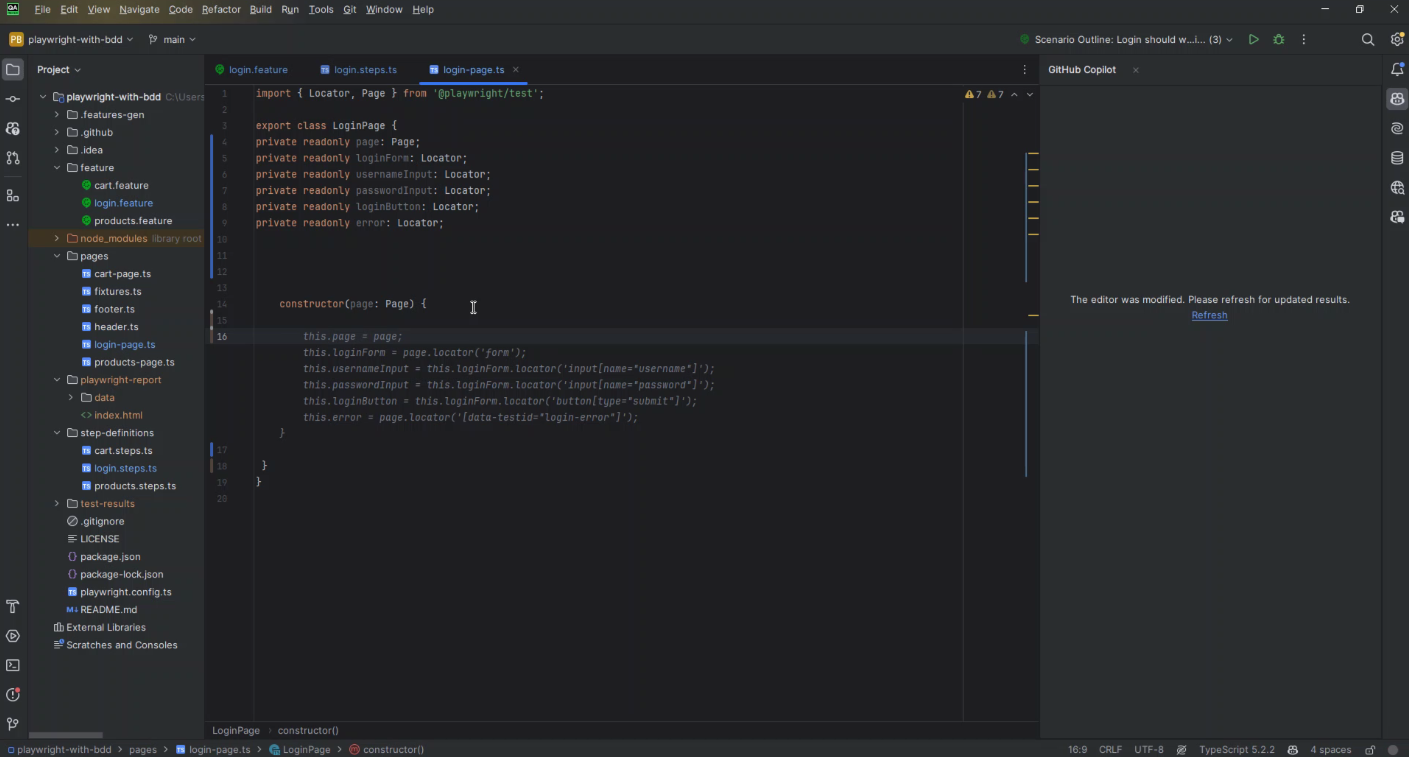

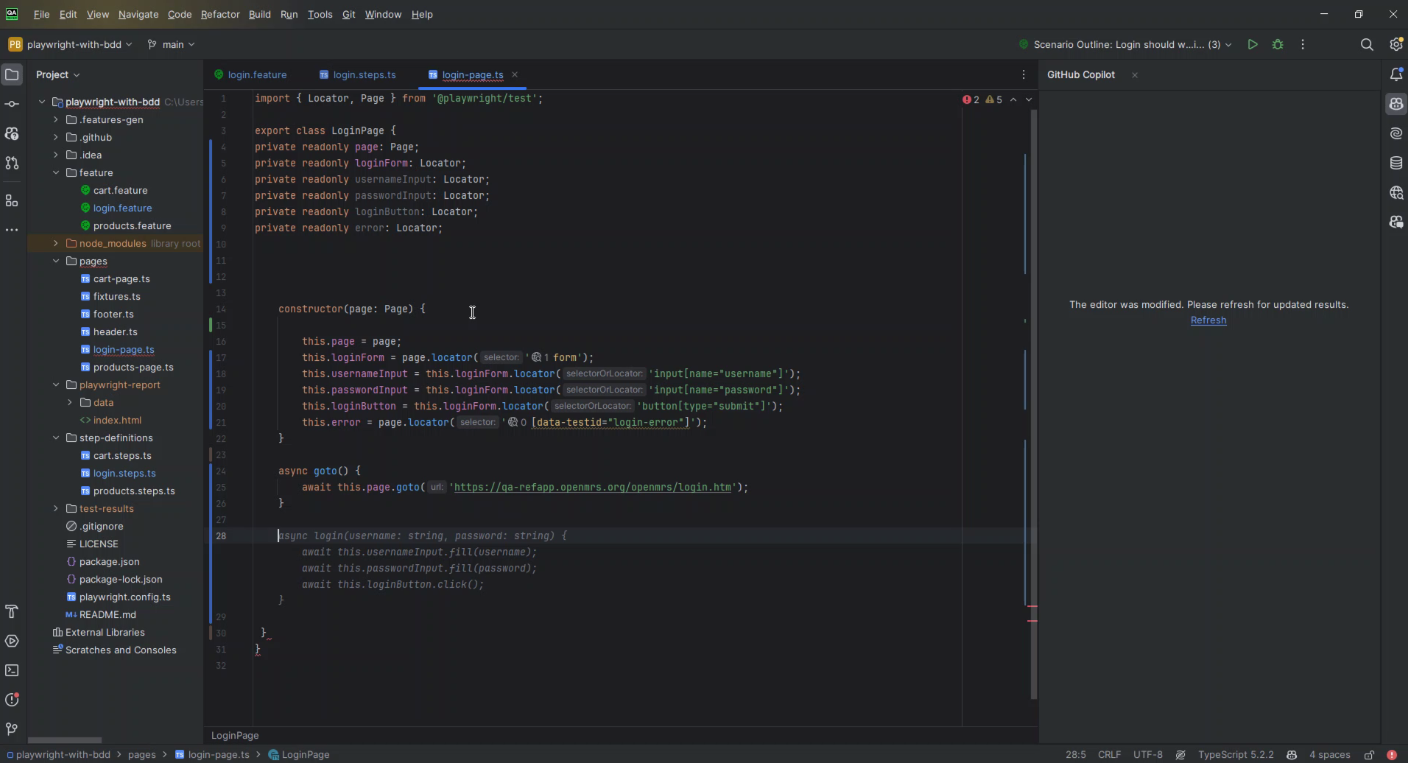

Example 1 – Adding Locators and Methods

Adding locators and methods to a page.ts file in a web automation project built with tools like Playwright or Selenium is a task where GitHub Copilot can help significantly. It streamlines defining page objects and their corresponding methods, improving the maintainability and readability of your test scripts. Here is a step-by-step guide on how to do this with the help of GitHub Copilot:

Set Up Your Environment

Ensure that GitHub Copilot is installed and activated within your code editor, such as Visual Studio Code. Open your automation project and navigate to the page.ts file where you intend to add locators and methods.

Define Page Object Locators

Start by adding comments in your page.ts file describing the elements you must interact with on the web page. For example:

```typescript
// Username input field
// Password input field
// Login button
```

Then, begin typing the code to define locators for these elements. GitHub Copilot should start suggesting completions based on your comments and the context of your existing code. For instance, if you’re using Playwright, it might offer something like:
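The following is an illustrative sketch rather than captured Copilot output. To keep it self-contained, the page is typed against a minimal interface mirroring the Playwright calls it uses: `page.locator()` returns a locator with `fill()` and `click()`. A real Playwright `Page` satisfies this interface. The selectors are hypothetical placeholders.

```typescript
// Minimal subset of Playwright's Locator and Page APIs used by this page object.
// A real Playwright Page (from '@playwright/test') is assignable to PageLike.
interface LocatorLike {
  fill(value: string): Promise<void>;
  click(): Promise<void>;
}

interface PageLike {
  locator(selector: string): LocatorLike;
}

export class LoginPage {
  readonly usernameInput: LocatorLike; // Username input field
  readonly passwordInput: LocatorLike; // Password input field
  readonly loginButton: LocatorLike; // Login button

  constructor(page: PageLike) {
    // Hypothetical selectors: replace them with ones that match your application.
    this.usernameInput = page.locator('#username');
    this.passwordInput = page.locator('#password');
    this.loginButton = page.locator('button[type="submit"]');
  }
}
```

Playwright locators are lazy: `page.locator()` does not query the page until an action such as `fill()` or `click()` runs, which is why defining them all in the constructor is safe.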

Implement Page Methods

After defining the locators, you will want to add methods to interact with these elements. Again, start with a comment describing the action you want to perform, such as logging in:

`// Method to log in`

Start typing the method implementation and let GitHub Copilot suggest the method’s body based on the locators you defined. For a login method, it might propose a body that fills in the username and password fields and then clicks the login button.
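As an illustration of where these two steps can lead (our own sketch, not recorded Copilot output), here is a Playwright-style page object with the three commented locators and a login method. The selectors `#username`, `#password`, and `button[type="submit"]` are hypothetical. To keep the example self-contained, it declares minimal `PageLike` and `LocatorLike` interfaces covering only `locator()`, `fill()`, and `click()`. Playwright's `Page` satisfies them structurally, so in a real page.ts you would more likely type the constructor parameter as `Page` from `@playwright/test`.

```typescript
// Minimal structural types covering only the Playwright calls used here.
// Playwright's real `Page` and `Locator` satisfy them, so in a project you can
// swap these for `import { type Page, type Locator } from '@playwright/test'`.
interface LocatorLike {
  fill(value: string): Promise<void>;
  click(): Promise<void>;
}

interface PageLike {
  locator(selector: string): LocatorLike;
}

class LoginPage {
  // Username input field
  readonly usernameInput: LocatorLike;
  // Password input field
  readonly passwordInput: LocatorLike;
  // Login button
  readonly loginButton: LocatorLike;

  constructor(page: PageLike) {
    // Hypothetical selectors: replace them with the ones your application uses.
    this.usernameInput = page.locator('#username');
    this.passwordInput = page.locator('#password');
    this.loginButton = page.locator('button[type="submit"]');
  }

  // Method to log in: fill both fields, then submit the form.
  async login(username: string, password: string): Promise<void> {
    await this.usernameInput.fill(username);
    await this.passwordInput.fill(password);
    await this.loginButton.click();
  }
}
```

In a Playwright test, `await new LoginPage(page).login('user', 'password')` followed by an assertion such as `await expect(page).toHaveURL(/dashboard/)` would exercise it. The dashboard URL pattern is, again, an assumption about your app.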

Refine Suggestions

GitHub Copilot’s suggestions are based on the patterns it has learned from a vast corpus of code, but they might not always be perfect for your specific use case. Review the suggestions carefully, making adjustments to ensure they meet your project’s requirements.

Test Your Page Object

Once you’ve added the locators and methods with the help of GitHub Copilot, it’s crucial to test them to ensure they work as expected. Write a test case that utilizes the new page object methods and run it to verify the interactions with the web page are correct.

Iterate and Expand

Add more locators and methods to your page objects as your project grows. GitHub Copilot can assist with this process, helping you generate the code needed for new page interactions quickly.

Share Knowledge

Share this knowledge with your team if you find GitHub Copilot helpful for specific tasks or discover best practices. Collaborative learning can help everyone make the most of the tool.

Example 3 – Analyzing codebase and multiple suggestions

When leveraging GitHub Copilot for analyzing a codebase and generating multiple suggestions in a test automation project, it's crucial to approach this with a strategy that maximizes the tool's capabilities while ensuring the quality and relevance of the suggestions. Here's a guide on how to effectively use GitHub Copilot for this purpose:

Understand Copilot’s Capabilities

First, recognize that GitHub Copilot generates suggestions based on the context of the code you’re working on and the comments you provide. It’s trained on a vast array of code from public repositories, making it a versatile tool for generating code across many languages and frameworks.

Provide Detailed Comments

Use detailed comments to describe the functionality you want to implement or the issue you’re trying to solve. GitHub Copilot uses these comments as a primary source of context. The more specific you are, the more accurate the suggestions.

– Example:

Instead of a vague comment like `// implement login test`, use something more descriptive like `// Test case: Ensure that a user can log in with valid credentials and is redirected to the dashboard`.

Break Down Complex Scenarios

For complex functionalities, break down the scenario into smaller, manageable pieces. This approach makes solving the problem easier and helps Copilot provide more focused and relevant suggestions.

– Example:

If you’re testing a multi-step form, describe and implement tests for each step individually before combining them into a comprehensive scenario.
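Here is a minimal sketch of that break-down, assuming a hypothetical three-step sign-up form (account, address, payment). So that it runs without a browser, each step is represented by a validation function with illustrative rules. What carries over to real UI tests is the structure: every step gets its own focused check, and the end-to-end scenario chains those same checks and reports the first step that fails.

```typescript
// Hypothetical three-step sign-up form; field names and rules are illustrative.
interface AccountStep { email: string; password: string }
interface AddressStep { street: string; postalCode: string }
interface PaymentStep { cardNumber: string }
interface SignupForm { account: AccountStep; address: AddressStep; payment: PaymentStep }

type StepErrors = string[];

// Step 1 on its own: account details.
function validateAccount(step: AccountStep): StepErrors {
  const errors: StepErrors = [];
  if (!step.email.includes('@')) errors.push('email must contain "@"');
  if (step.password.length < 8) errors.push('password must be at least 8 characters');
  return errors;
}

// Step 2 on its own: address.
function validateAddress(step: AddressStep): StepErrors {
  const errors: StepErrors = [];
  if (step.street.trim() === '') errors.push('street is required');
  if (!/^\d{5}$/.test(step.postalCode)) errors.push('postal code must be 5 digits');
  return errors;
}

// Step 3 on its own: payment (13 to 19 digits, no spaces).
function validatePayment(step: PaymentStep): StepErrors {
  return /^\d{13,19}$/.test(step.cardNumber) ? [] : ['card number must be 13 to 19 digits'];
}

// Full scenario: run the same step checks in order and stop at the first
// failing step, just as a user could not move past it in the UI.
function runSignupScenario(form: SignupForm): { failedStep: string | null; errors: StepErrors } {
  const steps: Array<[string, () => StepErrors]> = [
    ['account', () => validateAccount(form.account)],
    ['address', () => validateAddress(form.address)],
    ['payment', () => validatePayment(form.payment)],
  ];
  for (const [name, check] of steps) {
    const errors = check();
    if (errors.length > 0) return { failedStep: name, errors };
  }
  return { failedStep: null, errors: [] };
}
```

Because each step has its own check, a failure in the combined scenario points straight at the step to investigate.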

Explore Multiple Suggestions

GitHub Copilot can offer several suggestions for a given piece of code or comment. Don’t settle for the first one if it doesn’t fit your needs perfectly. Cycle through the alternatives to find the one that best matches your requirements or inspires a better solution.

Refine and Iterate

Use the initial suggestions from Copilot as a starting point, then refine and iterate. Copilot’s suggestions might not always be perfect or fully optimized. It’s essential to review, test, and tweak the code to ensure it meets your standards and fulfils the test requirements.

Leverage Copilot for Learning

GitHub Copilot can be an excellent learning tool for unfamiliar libraries or frameworks. By analyzing how Copilot implements specific tests or functionalities, you can gain insights into best practices and more efficient ways to write your test scripts.

Collaborate and Review

Involve your team in reviewing Copilot’s suggestions. Collaborative review can help catch potential issues and foster knowledge sharing about efficient coding practices and solutions Copilot may have introduced.

Utilize Copilot for Boilerplate Code

Use GitHub Copilot to generate boilerplate code for your tests. This can save time and allow you to focus on the unique aspects of your test scenarios. Copilot is particularly good at generating setup and teardown routines, mock data, and standard test structures.
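The kind of boilerplate meant here looks roughly like the following sketch: a mock-data factory plus a setup/teardown pair. The `User` shape, the in-memory `userStore`, and the function names are hypothetical stand-ins for your own model and fixtures. In Playwright Test, the same roles are usually played by `test.beforeEach`/`test.afterEach` hooks or fixtures.

```typescript
// Hypothetical domain model for the tests.
interface User {
  id: number;
  name: string;
  email: string;
  role: 'admin' | 'member';
}

// In-memory stand-in for whatever store your tests touch (an assumption).
const userStore = new Map<number, User>();
let nextId = 1;

// Mock-data factory: sensible defaults, overridden only where a test cares.
function makeUser(overrides: Partial<User> = {}): User {
  const id = nextId++;
  return { id, name: `User ${id}`, email: `user${id}@example.com`, role: 'member', ...overrides };
}

// Setup: start every test from the same known state (one seeded admin).
function setUp(): void {
  userStore.clear();
  nextId = 1;
  const admin = makeUser({ role: 'admin', name: 'Admin' });
  userStore.set(admin.id, admin);
}

// Teardown: leave nothing behind for the next test.
function tearDown(): void {
  userStore.clear();
}
```

Keeping defaults in one factory means a test only spells out the fields it is actually about, which is also the kind of code Copilot tends to complete reliably.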

Continuous Learning and Adaptation

GitHub Copilot shapes its suggestions around the context it can see: your comments, the code surrounding the cursor, and other files open in your editor. As your corrections and conventions accumulate in the codebase, its suggestions tend to follow your established style more closely. Engage with it as a dynamic tool whose output improves as that context becomes clearer.

Ensure Quality Control

Always remember that you and your team are responsible for the code’s quality and functionality. Use GitHub Copilot as an assistant, but ensure that all generated code is thoroughly reviewed and tested according to your project’s standards.

By following these strategies, you can effectively leverage GitHub Copilot to analyze your codebase and generate multiple relevant suggestions for your test automation project. Copilot can significantly speed up development, enhance learning, and introduce innovative solutions to complex problems. However, it’s crucial to stay engaged in the process, critically evaluating and refining the suggestions to ensure they meet your project’s specific needs and quality standards.

Example 4 – Generating Test Scenarios

Generating test scenarios with GitHub Copilot involves leveraging its AI-powered capabilities to brainstorm, refine, and implement detailed test cases for your software project. GitHub Copilot can assist in covering a broad spectrum of scenarios, including edge cases you might not have initially considered. Here’s how you can effectively use GitHub Copilot for generating test scenarios:

Start with Clear Descriptions

Begin by providing GitHub Copilot with explicit, descriptive comments about the functionality or feature you want to test. The more detailed and specific your description, the better Copilot can generate relevant test scenarios.

– Example:

Instead of simply commenting `// test login`, elaborate with details such as `// Test scenario: Verify that a user can successfully log in with valid credentials and is redirected to the dashboard. Include checks for incorrect passwords and empty fields.`

Utilize Assertions

Specify what outcomes or assertions should be tested for each scenario. Copilot can suggest various assertions based on the context you provide, helping ensure your tests are thorough.

– Example:

`// Ensure the login button is disabled when the password field is empty.`
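To show how such comments translate into checkable scenarios, here is a hedged sketch in which a hypothetical in-memory login function stands in for the real form, so it runs without a browser. The scenario table covers the cases from the comments above (valid credentials, an incorrect password, and empty fields), and the disabled-button rule is checked directly. Against a real page, that last assertion would typically be written with Playwright's `await expect(loginButton).toBeDisabled()`.

```typescript
// Hypothetical stand-in for the login form and backend.
const registeredUsers = new Map<string, string>([['alice', 'correct-horse']]);

type LoginOutcome = 'dashboard' | 'invalid-credentials' | 'missing-fields';

// Assertion target: the login button is enabled only when both fields have input.
function isLoginButtonEnabled(username: string, password: string): boolean {
  return username.trim() !== '' && password !== '';
}

function attemptLogin(username: string, password: string): LoginOutcome {
  if (!isLoginButtonEnabled(username, password)) return 'missing-fields';
  return registeredUsers.get(username) === password ? 'dashboard' : 'invalid-credentials';
}

interface LoginScenario {
  name: string;
  username: string;
  password: string;
  expected: LoginOutcome;
}

// Valid credentials, an incorrect password, and empty fields.
const loginScenarios: LoginScenario[] = [
  { name: 'valid credentials redirect to the dashboard', username: 'alice', password: 'correct-horse', expected: 'dashboard' },
  { name: 'incorrect password is rejected', username: 'alice', password: 'wrong', expected: 'invalid-credentials' },
  { name: 'empty password keeps the button disabled', username: 'alice', password: '', expected: 'missing-fields' },
  { name: 'empty username keeps the button disabled', username: '', password: 'correct-horse', expected: 'missing-fields' },
];

for (const s of loginScenarios) {
  const actual = attemptLogin(s.username, s.password);
  console.log(`${actual === s.expected ? 'PASS' : 'FAIL'}: ${s.name}`);
}
```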

Explore Edge Cases

Ask Copilot to suggest edge cases or less common scenarios that might not be immediately obvious. This can significantly enhance your test coverage by including scenarios that could lead to unexpected behaviors.

– Example:

`// Generate test cases for edge cases in user registration, such as unusual email formats and maximum input lengths.`
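The edge cases such a prompt should surface might look like the table below. This sketch rests on explicit assumptions: the 254-character email limit, the 30-character username limit, and the deliberately simple email rule are illustrative choices for a hypothetical registration form. Real-world email validation is considerably more permissive than this rule.

```typescript
// Illustrative limits for a hypothetical registration form.
const MAX_EMAIL_LENGTH = 254;
const MAX_USERNAME_LENGTH = 30;

// Deliberately simple email rule: exactly one "@", a non-empty local part,
// no whitespace, and a domain with at least one dot and no empty labels.
function isValidEmail(email: string): boolean {
  if (email.length > MAX_EMAIL_LENGTH || /\s/.test(email)) return false;
  const parts = email.split('@');
  if (parts.length !== 2 || parts[0] === '') return false;
  const labels = parts[1].split('.');
  return labels.length >= 2 && labels.every((label) => label !== '');
}

function isValidUsername(name: string): boolean {
  return name.length >= 1 && name.length <= MAX_USERNAME_LENGTH;
}

// Edge cases: unusual formats and values on either side of each length limit.
const emailCases: Array<[string, boolean]> = [
  ['user@example.com', true],
  ['first.last+tag@sub.example.co.uk', true], // plus-addressing, multi-level domain
  ['no-at-sign.example.com', false],
  ['two@@example.com', false],
  ['user@localhost', false], // rejected by this simplified rule
  ['user@example..com', false], // empty domain label
  ['has space@example.com', false],
  ['a'.repeat(242) + '@example.com', true], // exactly 254 characters
  ['a'.repeat(243) + '@example.com', false], // 255 characters, one over the limit
];

const usernameCases: Array<[string, boolean]> = [
  ['', false],
  ['a', true],
  ['a'.repeat(MAX_USERNAME_LENGTH), true],
  ['a'.repeat(MAX_USERNAME_LENGTH + 1), false],
];

for (const [input, expected] of emailCases) {
  if (isValidEmail(input) !== expected) console.log(`FAIL: email ${JSON.stringify(input.slice(0, 40))}`);
}
for (const [input, expected] of usernameCases) {
  if (isValidUsername(input) !== expected) console.log(`FAIL: username of length ${input.length}`);
}
```

Testing exactly at and one past each limit is usually where off-by-one bugs show up.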

Generate Data-Driven Tests

Data-driven testing is crucial for covering a wide range of input scenarios. Prompt Copilot to generate templates or code snippets for implementing data-driven tests.

– Example:

`// Create a data-driven test for the search functionality, testing various input strings, including special characters.`
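In a data-driven test, the inputs and expected results live in a table and a single loop drives the checks. The sketch below assumes a hypothetical in-memory `catalogue` and `search()` function in place of a real search backend. It includes special characters (`+`, `#`, `.`, `&`, `%`, `*`) that often trip up implementations built on regular expressions or URL encoding.

```typescript
// Hypothetical in-memory catalogue standing in for the real search backend.
const catalogue = ['C++ Primer', 'C# in Depth', 'Node.js Design Patterns', 'Rock & Roll', '100% Java'];

// Case-insensitive substring search. The query is matched literally, so
// characters like "+", ".", "*" or "%" have no special meaning.
function search(query: string): string[] {
  const q = query.trim().toLowerCase();
  if (q === '') return [];
  return catalogue.filter((title) => title.toLowerCase().includes(q));
}

// The test data lives in a table, separate from the logic that runs it.
const searchData: Array<{ query: string; expected: string[] }> = [
  { query: 'c++', expected: ['C++ Primer'] },
  { query: 'C#', expected: ['C# in Depth'] },
  { query: '.js', expected: ['Node.js Design Patterns'] },
  { query: '&', expected: ['Rock & Roll'] },
  { query: '100%', expected: ['100% Java'] },
  { query: '.*', expected: [] }, // regex-like input must not match everything
  { query: '   ', expected: [] }, // whitespace-only query returns nothing
];

// Driver: one loop runs every row of the table.
for (const row of searchData) {
  const actual = search(row.query);
  const ok = JSON.stringify(actual) === JSON.stringify(row.expected);
  console.log(`${ok ? 'PASS' : 'FAIL'}: search(${JSON.stringify(row.query)})`);
}
```

Adding a new case means adding a row, not writing a new test.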

Implement Parameterized Tests

Parameterized tests allow you to run the same test with different inputs. Use Copilot to help write parameterized tests for more efficient scenario coverage.

– Example:

`// Write a parameterized test for the password reset feature using different types of email addresses.`
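Playwright Test's documented way to parameterize is to loop over the data and call `test()` once per item. This sketch uses a small hypothetical `parameterized()` helper with the same shape instead, so it runs without the test runner: one test body, executed once for each email variant. The reset handler and the registered addresses are invented for illustration.

```typescript
// Hypothetical registered accounts, stored in normalised (lower-case) form.
const registeredEmails = new Set(['ana@example.com', 'marko.p@mail.example.org']);

type ResetResult = 'reset-link-sent' | 'invalid-email' | 'unknown-account';

// Hypothetical password-reset handler: normalise, validate the format, then look up.
function requestPasswordReset(rawEmail: string): ResetResult {
  const email = rawEmail.trim().toLowerCase();
  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) return 'invalid-email';
  return registeredEmails.has(email) ? 'reset-link-sent' : 'unknown-account';
}

// Minimal parameterisation helper (hypothetical): one test body, run once per case.
function parameterized<T>(name: string, cases: T[], body: (testCase: T) => boolean): boolean {
  let allPassed = true;
  for (const testCase of cases) {
    const ok = body(testCase);
    console.log(`${ok ? 'PASS' : 'FAIL'}: ${name} ${JSON.stringify(testCase)}`);
    allPassed = allPassed && ok;
  }
  return allPassed;
}

const resetCases: Array<{ email: string; expected: ResetResult }> = [
  { email: 'ana@example.com', expected: 'reset-link-sent' },
  { email: '  ANA@Example.COM ', expected: 'reset-link-sent' }, // mixed case, stray spaces
  { email: 'marko.p@mail.example.org', expected: 'reset-link-sent' }, // dotted local part, subdomain
  { email: 'ana+reset@example.com', expected: 'unknown-account' }, // plus-addressing is not aliased here
  { email: 'nobody@example.com', expected: 'unknown-account' },
  { email: 'not-an-email', expected: 'invalid-email' },
];

const resetSuitePassed = parameterized('password reset', resetCases, (c) => requestPasswordReset(c.email) === c.expected);
```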

Leverage Copilot for Continuous Integration (CI) Tests

If your project uses CI/CD pipelines, Copilot can suggest scenarios specifically designed to be executed in CI environments, focusing on automation and efficiency.

– Example:

`// Suggest test scenarios for CI pipeline that cover critical user flows, ensuring quick execution and minimal flakiness.`
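One common way to keep a CI stage fast is to run only the tests tagged as covering critical flows. In Playwright Test this is usually done by adding a tag such as `@critical` to test titles and running `npx playwright test --grep @critical`. The sketch below mimics that selection with a hypothetical in-memory test list and estimated durations, so you can see which tests the pipeline would run and roughly how long they would take.

```typescript
// Hypothetical test inventory with rough durations; in a real project these
// would come from your test runner's reports.
interface TestCase {
  title: string;
  durationMs: number;
}

const allTests: TestCase[] = [
  { title: 'login with valid credentials @critical', durationMs: 1200 },
  { title: 'checkout with a saved card @critical', durationMs: 3400 },
  { title: 'profile avatar upload', durationMs: 5200 },
  { title: 'search returns results @critical', durationMs: 900 },
  { title: 'newsletter preferences', durationMs: 2100 },
];

// Same idea as `--grep @critical`: keep only tests whose title carries the tag.
function selectForCi(tests: TestCase[], tag = '@critical'): TestCase[] {
  return tests.filter((t) => t.title.includes(tag));
}

// Estimated wall-clock time of a sequential run.
function totalDurationMs(tests: TestCase[]): number {
  return tests.reduce((sum, t) => sum + t.durationMs, 0);
}

const ciSuite = selectForCi(allTests);
console.log(`${ciSuite.length} critical tests, about ${totalDurationMs(ciSuite)} ms`);
```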

Refine Suggestions

Review the test scenarios and code snippets suggested by Copilot. While Copilot can provide a strong starting point, refining these suggestions to fit your project’s specific context and standards is crucial.

Iterate and Expand

Use the initial set of scenarios as a foundation, and continuously iterate by adding new scenarios as your project evolves. GitHub Copilot can assist with this ongoing process, helping identify new testing areas as features are added or modified.

Collaborate with Your Team

Share the generated scenarios with your team for feedback. Collaborative review can help ensure the scenarios are comprehensive and aligned with the project goals. GitHub Copilot can facilitate brainstorming sessions, making the process more efficient and thorough.

Document Your Test Scenarios

Finally, document the generated test scenarios for future reference and new team members. GitHub Copilot can even assist in developing documentation comments and descriptions for your test cases.

GitHub Copilot can significantly streamline the process of generating test scenarios, from everyday use cases to complex edge cases. By providing detailed descriptions and leveraging Copilot’s suggestions, you can enhance your test coverage and ensure your software is robust against various inputs and conditions. Remember, the effectiveness of these scenarios depends on your engagement with Copilot’s suggestions and your willingness to refine and iterate on them.

Conclusion: Redefining Test Automation's Horizon

Integrating advanced automation tools marks a pivotal moment in the evolution of Test Automation, bringing about a significant broadening of capabilities and a redefinition of the quality assurance process. This progress represents not merely an advancement in automation technology but a stride towards a future where QA is seamlessly integrated with creativity, insight, and a deep commitment to enhancing user experience. In this future landscape, QA professionals evolve from their conventional role of bug detectors to become creators of digital experiences, ensuring software is not only technically sound but also excels in delivering user satisfaction and upholding ethical standards.

Efficiency and Innovation as Core Pillars

Collaboration between QA engineers and advanced automation tools ushers in an era of heightened efficiency, enabling quicker release cycles without sacrificing output quality. This is made possible by automating mundane tasks, applying intelligent code and interface analyses, and employing predictive models to identify potential issues early. These technological advancements free QA engineers to apply their skills in areas that benefit from human insight, such as enhancing user interface design and formulating strategic testing approaches.

Elevating Intuition and Creativity

Looking ahead, the QA process will transcend traditional test scripts and manual verification, becoming enriched by the intuitive and creative contributions of QA professionals. Supported by AI, these individuals are empowered to pursue innovative testing approaches, navigate the complexities of software quality on new levels, and craft inventive solutions to intricate problems. This envisioned future places a premium on the unique human ability to blend technical knowledge with creative thinking, pushing the boundaries of what is possible in quality assurance.

Achieving Excellence in Software Experiences

The primary goal of delivering outstanding software experiences becomes increasingly feasible. This ambition extends beyond merely identifying defects sooner or automating testing procedures; it encompasses a comprehensive understanding of user requirements, ensuring software is accessible and inclusive, and fostering trust through robust privacy and security measures. Test Automation emerges as a crucial element in the strategic development of software, significantly impacting design and functionality to fulfil and surpass user expectations.

Transformation of QA Engineers’ Roles

As the industry progresses toward this enriched future, the transformation of the QA engineer’s role signals a broader evolution within the software development field. QA engineers play a larger part in decision-making, influencing product design and strategic direction. Their expertise is sought not only for verifying software’s technical accuracy but also for their deep understanding of user behavior, preferences, and requirements.

Navigating the Path Forward

Realizing this vision involves overcoming obstacles, necessitating ongoing innovation, education, and adaptability. The benefits of pursuing this path are substantial. By adopting advanced technologies and methodologies, QA teams can refine their processes and contribute to developing digital products that genuinely connect with and satisfy users. Successful navigation of this path relies on interdisciplinary collaboration, continuous learning, and a dedication to achieving excellence.

Elevating Human Experience Through Technology

Ultimately, this envisioned future of Test Automation represents a broader industry call to use technology not only for efficiency and profit but also as a vehicle for improving the human condition. In this future, technology produces software that is functional, dependable, enjoyable, and inclusive, aligned with our collective values and ethics. Integrating advanced tools and methodologies marks the first steps toward a future where quality assurance is integral to developing software that positively impacts lives and society.

Author

Jagoda Peric

Quality Assurance Engineer

Podcast: Navigating the Responsible Development of LLMs with Vijay Karunamurthy


Demystifying the Path to Trustworthy AI

Vijay Karunamurthy, Field CTO at Scale AI, joins CTO Confessions to discuss his career in AI and the future of generative models. Vijay shares lessons from early neural network research and his time at YouTube, where he helped build recommendation systems. He explains his role in translating customer needs into product roadmaps and ensuring ethical, transparent AI development.

The conversation explores AI’s potential to enhance understanding of complex issues through multimodal interfaces. Vijay offers advice on entrepreneurship and gaining new perspectives through direct conversations.

“AI will change the world, but not so much the fundamentals that shape how software development is done.”

–Vijay Karunamurthy–

- Fine-tuning AI models for real-time use cases like robots and self-driving cars will democratize access to AI.

- Leveraging direct conversations with customers, researchers, and experts will help you gain new perspectives and challenge assumptions.

- Testing is paramount as it will determine how safe, usable, and effective AI will be in the future.


Career Advancement Opportunities


At IT Labs, we’re not just about the cutting-edge technology we create; we’re also deeply committed to the continuous growth and development of our team. That’s why we created the IT Labs Wisdom Hub – a collection of thoughtfully and professionally designed online training programs, continuously improved and encompassing topics from technical to soft skills, optimized for self-paced learning and carefully crafted to match the needs of our colleagues. Through our IT Labs Wisdom Hub, we offer an unparalleled opportunity for professional and personal advancement, all designed with your future in mind. Check out the main categories:

Your Growth is Our Priority

At IT Labs, the will to grow, learn, and improve is both a priority and a requirement, and that’s exactly why we’ve made it our number one goal to invest in people’s careers, skills, and futures, and to help them fulfil their potential and professional goals. The most important task our employees have is continuous learning, and just as they contribute to the success of IT Labs, we support them every step of the way.

What are the Top 5 CTO Challenges in Leading Teams – Part 5


By TC Gill

Welcome to Part 5 of our series on the Top 5 CTO Challenges in Leading Teams. In this installment, we delve into a critical aspect of tech leadership: budget management. In today’s tech landscape, where technology functions are not just cost centers but integral to business success, effective budget management is paramount. Join us as we explore the challenges CTOs face in budget management and discover strategies to navigate them successfully.

The Challenge of Budget Management

In the realm of technology leadership, effective budget management encompasses far more than mere financial oversight. It represents a strategic endeavor marked by a series of intricate challenges that CTOs face in their pursuit of fostering innovation while ensuring operational efficiency. These challenges are multifaceted, reflecting the dynamic interplay between forecasting accuracy, balancing innovation with operational necessities, and managing stakeholder expectations within the rapidly evolving technology landscape.

Forecasting Accuracy and Unpredictability

One of the most significant hurdles in budget management is the ability to forecast future financial needs with a high degree of accuracy. The tech industry is known for its rapid pace of change, with new technologies emerging and market demands evolving at an unprecedented rate. This unpredictability makes it challenging for CTOs to anticipate expenses accurately, often leading to discrepancies between forecasted and actual spending. The task is further complicated by the need to stay ahead of technological advancements, requiring allocations for new investments that were not part of the original budget planning. This scenario demands a flexible approach to budgeting, one that allows for quick adjustments in response to technological shifts without jeopardizing the financial stability of ongoing projects.

Balancing Innovation with Operational Necessities

Another critical challenge is the allocation of resources between innovative projects and the maintenance of existing operations. CTOs are tasked with driving growth through innovation while ensuring the reliability and efficiency of current systems. This balancing act is complicated by the competing demands of projects, each vying for a share of the budget based on their potential to contribute to the organization's strategic goals. The challenge lies in making informed decisions that prioritize projects not only on their immediate impact but also on their alignment with long-term objectives. This often involves tough choices, such as reallocating resources from popular initiatives to those that promise greater strategic value, all while maintaining operational excellence.

Managing Stakeholder Expectations

Compounding these challenges is the need to manage the expectations of a diverse group of stakeholders, including team members, executives, and external partners. Each group has its own set of priorities and expectations regarding budget allocations, making it difficult to achieve consensus. For instance, while the development team may push for increased funding for cutting-edge research, the finance department might emphasize cost reduction and efficiency. Navigating these differing priorities requires CTOs to engage in clear communication and consensus-building, striving to align budget allocations with the organization's overarching strategic goals. This involves making difficult decisions, negotiating compromises, and sometimes, advocating for budget adjustments to ensure both innovation and operational stability are adequately funded.

The Steps Needed for Effective Budget Management

In the fast-paced world of technology, Chief Technology Officers (CTOs) face the tricky balance of planning for the unknown and making every penny count. Gone are the days of set-it-and-forget-it budgets. Today, managing finances means being as nimble and innovative as the technologies we work with. We're diving into three key strategies that make budgeting less of a headache and more of a strategic advantage: Dynamic Budget Formulation and Adjustment, Prioritization and Resource Optimization, and Embracing Agile Financial Planning. These approaches not only help tackle the unpredictable nature of tech finance but also ensure that CTOs can keep their teams innovating, operations smooth, and stakeholders happy—all without breaking the bank. Let's explore how these strategies can transform budget woes into budget wins.

Dynamic Budget Formulation and Adjustment

To combat forecasting inaccuracies and the unpredictability of the tech landscape, adopting a dynamic approach to budget formulation and adjustment is crucial. This strategy involves regular review and realignment of the budget to reflect changing priorities and market conditions. By allowing for frequent adjustments, CTOs can ensure that financial planning remains flexible, responsive to technological shifts, and capable of accommodating new investments. This approach directly tackles the challenge of forecasting accuracy by instituting a framework that supports swift adaptation to unforeseen financial needs and opportunities.

Prioritization and Resource Optimization

Addressing the challenge of balancing innovation with operational necessities, the solution of prioritization and resource optimization is key. By employing a structured evaluation process for projects, such as the MoSCoW method, CTOs can identify and allocate resources to initiatives that align with the organization's strategic goals and offer the highest potential impact. This strategic prioritization ensures that limited resources are directed toward projects that not only promise significant returns but also maintain or enhance operational stability. This solution facilitates informed decision-making, enabling CTOs to navigate the delicate balance between fostering innovation and sustaining existing operations effectively.
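To make the MoSCoW method concrete, here is a hypothetical sketch of how a prioritised project list could be turned into a budget allocation. The project names, costs, and budget are invented. The rules are a simplification for illustration, not a prescribed process: fund projects in Must/Should/Could order while money remains, never fund “Won’t have (this time)” items, and flag any Must-have that does not fit so it can be escalated.

```typescript
// MoSCoW categories: Must have, Should have, Could have, Won't have (this time).
type MoSCoW = 'must' | 'should' | 'could' | 'wont';

interface Project {
  name: string;
  priority: MoSCoW;
  cost: number; // hypothetical cost units, e.g. thousands of euros
}

const RANK: Record<MoSCoW, number> = { must: 0, should: 1, could: 2, wont: 3 };

interface Allocation {
  funded: string[];
  deferred: string[];
  unfundedMusts: string[]; // must-haves that did not fit: escalate these
  remaining: number;
}

// Fund projects in MoSCoW order while budget remains; "won't" items are always deferred.
function allocate(projects: Project[], budget: number): Allocation {
  const result: Allocation = { funded: [], deferred: [], unfundedMusts: [], remaining: budget };
  const ordered = [...projects].sort((a, b) => RANK[a.priority] - RANK[b.priority]);
  for (const p of ordered) {
    if (p.priority !== 'wont' && p.cost <= result.remaining) {
      result.funded.push(p.name);
      result.remaining -= p.cost;
    } else {
      result.deferred.push(p.name);
      if (p.priority === 'must') result.unfundedMusts.push(p.name);
    }
  }
  return result;
}

const projects: Project[] = [
  { name: 'Security patching', priority: 'must', cost: 30 },
  { name: 'Cloud cost monitoring', priority: 'must', cost: 20 },
  { name: 'New analytics platform', priority: 'should', cost: 40 },
  { name: 'Internal developer portal', priority: 'could', cost: 25 },
  { name: 'Legacy UI rewrite', priority: 'wont', cost: 60 },
];

console.log(allocate(projects, 100));
```

With a budget of 100, both Must-haves and the Should-have are funded, leaving 10 units, which is not enough for the Could-have. Cutting the budget to 40 leaves one Must-have in `unfundedMusts`, which is exactly the kind of trade-off to take back to stakeholders.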

Embracing Agile Financial Planning

To manage the complex interplay of stakeholder expectations, adopting an agile approach to financial planning offers a pathway to achieving consensus and aligning budget allocations with strategic objectives. Agile budgeting, characterized by its flexibility and iterative nature, allows for ongoing adjustments based on feedback from stakeholders and changes in business needs. This method promotes transparency and collaboration, encouraging input from various departments and ensuring that budget decisions support the overall vision of the organization. By fostering an environment where financial planning is adaptable and inclusive, CTOs can more effectively manage stakeholder expectations, negotiate compromises, and ensure that budget allocations reflect a shared understanding of the organization's priorities.

Conclusion

So, there you have it: what “I” think are the top five challenges. This is just from my own perspective and the conversations I’ve had with tech leaders. What topics would you have added to the list? I’d love to know. Drop me a line at tc.gill@it-labs.com if you feel there is a particular one that should be mentioned and unpacked.

So, what do you think? What do the challenges mentioned here mean to you? Did they get you thinking? How can you overcome these challenges? Is there something on the other side of them? An “opportunity,” maybe, if you can get them out of the way!

Drop me a line and let me know your thoughts. In the meantime, keep growing and thriving.

Leadership is a ride… do your best to enjoy it.

Author

TC Gill – People Development Coach and Strategist

Podcast: Architecting Success: Andreas Westendörpf's Vision of Tech as Business Backbone


From Software Engineering to Sleep Science: The Unconventional Path of a Tech Visionary at Emma

In this insightful episode of CTO Confessions, listeners are treated to an in-depth exploration of technology and leadership through the experiences of Andreas Westendörpf, CTO of Emma. His journey, from a budding software engineer to an influential technology leader, is a central theme. The episode covers the crucial role of good architecture, with a focus on the MACH Alliance’s contributions to e-commerce. It delves into the significant impact of organizational structure on technology, advocating for empowered, interdisciplinary product teams. Additionally, the episode sheds light on topics like technical debt and the essence of software development, all while underscoring the necessity of empathy in the tech industry.

“Tech is actually the way how to find and exploit new revenue streams. Technology is not supporting, enabling or empowering business anymore. It IS business.”

- Empathy is Key in Bridging Technology and Business – Mutual understanding between technical and non-technical teams is crucial for effective collaboration and problem-solving.

- Organizational Dynamics Shape Software Development – The structure of an organization significantly influences its software architecture. Implementing effective team structures and interdisciplinary approaches can lead to more innovative and efficient product development.

- Continuous Learning and Community Engagement are Essential – Engaging with peer networks, podcasts, and tech blogs is vital for staying updated and tackling the complexities of technology leadership.


Martin, what in the Java World is Quarkus?


Welcome, Java devs (and everyone else)! We interviewed one of our Senior Back-end Engineers, Martin Trajkov, a skilled Java expert who sat down with us to talk about Quarkus!

Martin is not just any developer; he's an expert in making Java applications work great in the cloud and has lots of experience in this area. He knows how to explain complex ideas in simple ways, making learning about Quarkus fun and easy.

Martin Trajkov

Senior Back-end Engineer

Think of this interview as the pre-game before Martin guides us through the exciting world of Quarkus in his upcoming webinar.

We ask, Martin answers all the big questions, and by the end you’ll know everything from how to make Java applications run better in the cloud to how to make them start up really fast, all in an easy-to-understand and fun way!

Once again, Martin - what in the Java world is Quarkus?

Think of Quarkus as the superhero of Java frameworks, swooping in to save the day with its super-fast boot times and sleek performance. It’s like giving your Java applications a dose of super-serum to make them cloud-native and Kubernetes-friendly.

To give you the final definition: Quarkus is a modern Java framework that allows developers to create efficient and fast microservices and serverless applications, with a focus on reducing memory usage and startup time, making it ideal for cloud deployments.

Is Quarkus just another framework I need to learn, or is it actually going to make my life easier?

Oh, it’s definitely here to make your life easier, not to add to your developer’s toolbox of doom. Imagine writing code and seeing changes instantly, without the agonizing wait. That’s Quarkus for you—like having a fast-forward button for your coding!

Will Quarkus work with my existing Java knowledge, or do I need to start from scratch?

No need to toss your Java knowledge out the window! Quarkus builds on what you already know and love about Java, just turbocharging it for the cloud era. It’s like your Java skills got an upgrade!

I heard Quarkus works well with GraalVM. What's the deal there?

Yep, Quarkus and GraalVM are the dynamic duo! GraalVM allows Quarkus apps to compile into native executables, which means even faster startup times and lower memory usage. It’s like your app goes on a super-efficient diet and exercise regimen.

Can Quarkus really make my applications start that much faster?

Absolutely! Quarkus is like a shot of espresso for your applications. You’ll go from sleepy, slow startups to lightning-fast launches, making your apps feel as if they’ve just sprinted out of the blocks.

What about the learning curve? Is Quarkus developer-friendly for newbies and veterans alike?

Quarkus is designed with all levels of Java developers in mind. It has a gentle learning curve for newbies while offering deep and powerful features that veterans will appreciate. Think of it as a friendly tour guide through the land of cloud-native Java, offering helpful tips and snacks along the way.

What about Quarkus and Kubernetes?

Quarkus and Kubernetes are like best buddies in the cloud-native playground. Quarkus is built with Kubernetes in mind, so it’s like it inherently knows how to scale, heal, and play nicely with containers, making your deployment a breeze.

So where can I learn more about this?

As a matter of fact – I have a webinar on the topic coming up, in which I’ll show you around Quarkus, some of its features, show you how it can make your life easier, and why it might be a better choice for you in your Java endeavors!

Sign up here –> Webinar – Quarkus: Crafting the Future of Java in the Cloud

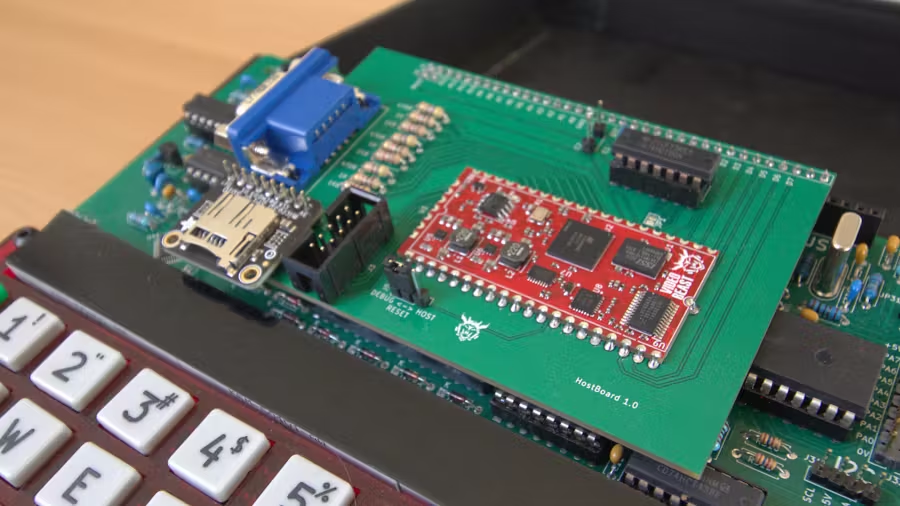

FPGA in 2023: A Comprehensive Overview of Key Milestones and Innovations


Last year heralded a new era in FPGA technology, marked by groundbreaking advancements that extended from sophisticated acoustic imaging to immersive augmented reality, high-speed data transmission, and beyond. This overview delves into these transformative innovations, showcasing their impact across various sectors and highlighting the expanding capabilities of FPGA applications.

As we navigate through these pivotal developments, we uncover how they have not only advanced technological frontiers but also set new benchmarks for future innovations in the digital and analog realms.

IT Labs’ experts have picked the most notable FPGA events and breakthroughs of the year, the ones with the potential to significantly shape the industry, and provided their analysis of the long-lasting impact each might have.

Scroll on down for the full take!

January - SM Instruments launches an FPGA real-time sound camera with high-speed beamforming technology

The SeeSV-S206 camera detects highly transient noise sources using high-speed, FPGA-based beamforming technology.

Beamforming is used to improve the signal-to-noise ratio of received signals, suppress undesirable interference sources, and focus on signals coming from a specific location. The camera also offers Wi-Fi connectivity.

These sources are detected by a 96-channel MEMS microphone that immediately picks up small, annoying sounds such as buzzes, squeaks, and rattles. The camera can also visualize noise, vibration, and harshness (NVH) sources. It captures 25 images per second and comes with real-time sound imaging software that helps visualize the sounds.

Kicking off the year, January saw the debut of the SeeSV-S206 by SM Instruments, a state-of-the-art sound camera that integrates FPGA technology with beamforming to revolutionize noise detection and analysis. This tool stands out for its precision in pinpointing and visualizing elusive sound sources, offering a significant advantage to industries like automotive and manufacturing where accurate noise identification is critical.

Short overview

The SeeSV-S206 is equipped with high-speed beamforming technology, powered by FPGA, which enhances the signal-to-noise ratio of received signals and eliminates unwanted interference. This technology, coupled with Wi-Fi connectivity, enables the camera to pinpoint elusive noise sources with remarkable precision. Utilizing a 96-channel MEMS microphone, the camera can swiftly detect small, bothersome sounds such as buzzes, squeaks, and rattles. Additionally, its real-time sound imaging software allows for detailed visualization of noise, vibration, and harshness sources at a capture rate of 25 images per second.
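SM Instruments’ actual FPGA pipeline isn’t described here, but the core idea behind acoustic beamforming can be illustrated with the classic delay-and-sum technique: delay each microphone’s signal so that sound from one chosen direction lines up, then average. The sketch below is a minimal software model under assumed parameters (a hypothetical 8-microphone line array with 2 cm spacing, 48 kHz sampling, a 2 kHz test tone from 30 degrees, whole-sample delays); the real camera uses 96 channels in its own arrangement. The function names `arrival_delays` and `delay_and_sum` are illustrative only.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed)


def arrival_delays(mic_x, angle_deg, fs):
    """Whole-sample arrival delay at each microphone of a plane wave from
    angle_deg (0 = broadside) on a linear array with positions mic_x in metres."""
    s = math.sin(math.radians(angle_deg))
    raw = [x * s / SPEED_OF_SOUND * fs for x in mic_x]
    base = min(raw)
    return [round(r - base) for r in raw]  # shift so every delay is >= 0


def delay_and_sum(channels, delays):
    """Undo each channel's steering delay and average across channels.
    Sound from the steered direction adds coherently; sound from other
    directions arrives misaligned and partially cancels."""
    n = min(len(ch) - d for ch, d in zip(channels, delays))
    return [sum(ch[i + d] for ch, d in zip(channels, delays)) / len(channels)
            for i in range(n)]


def power(x):
    return sum(v * v for v in x) / len(x)


# Hypothetical setup: 8 mics spaced 2 cm apart, 48 kHz sampling,
# a 2 kHz tone arriving from 30 degrees.
fs = 48_000
mic_x = [0.02 * k for k in range(8)]
source = [math.sin(2 * math.pi * 2000 * t / fs) for t in range(2000)]
true_delays = arrival_delays(mic_x, 30.0, fs)
pad = max(true_delays)
# Microphone k hears the source true_delays[k] samples late.
channels = [[0.0] * d + source + [0.0] * (pad - d) for d in true_delays]

on_target = delay_and_sum(channels, arrival_delays(mic_x, 30.0, fs))
off_target = delay_and_sum(channels, arrival_delays(mic_x, -30.0, fs))
print(f"power steered at +30 deg: {power(on_target):.3f}")
print(f"power steered at -30 deg: {power(off_target):.3f}")
```

Steering toward the true direction recovers the tone at full strength, while steering toward -30 degrees leaves only a small residual. Repeating this for a grid of look directions and mapping the output power is, roughly, how a sound camera turns microphone signals into an image; the fixed delay lines and adder tree are the kind of regular, parallel structure that suits an FPGA.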

Impact

In the automotive industry, the SeeSV-S206 revolutionizes noise detection by facilitating the identification of engine or gear malfunctions and the detection of air or gas leaks. This capability streamlines maintenance processes, enhances quality control measures, and improves overall operational efficiency. Beyond automotive applications, the technology holds promise for use in various industries where precise noise detection is essential for resolving issues and optimizing performance.

February - Brilliant Labs’ augmented reality monocle - an innovative gadget for the imaginative hacker

February was marked by the unveiling of a cutting-edge, open-source augmented reality monocle. This innovative device, equipped with a comprehensive suite of features including FPGA technology, offers a customizable AR experience that seamlessly integrates with everyday life. From enhancing leisure activities to revolutionizing practical tasks, the monocle’s adaptability and wide range of applications set a new standard for personal tech in our daily routines.

Short overview

The augmented reality monocle is a multifunctional device comprising a display, camera, microphone, PCB, microcontroller, FPGA, and battery. It seamlessly connects to mobile phones via Bluetooth, allowing for versatile integration with existing technologies. The device’s functionality is highly adaptable, with open-source software like MicroPython enabling users to reprogram it to suit their specific needs.

The FPGA, which drives the display, can be configured for various purposes such as computer vision, AI processing, or graphics acceleration, offering a wide range of applications. By clipping onto existing eyewear, the monocle combines a single-lens display with AR technology, overlaying digital information onto the user’s real-world view. This versatility opens up many possibilities, from browsing the web while cooking to navigating with maps while biking, real-time language translation, healthcare monitoring, and QR code/barcode detection.

Impact

The augmented reality monocle’s adaptability and functionality make it a valuable tool in everyday life. Its ability to seamlessly integrate digital information into real-world scenarios enhances productivity, convenience, and safety across various activities. Whether used for leisure, communication, or practical tasks, the monocle represents a significant advancement in AR technology, catering to both tech enthusiasts and mainstream users alike.

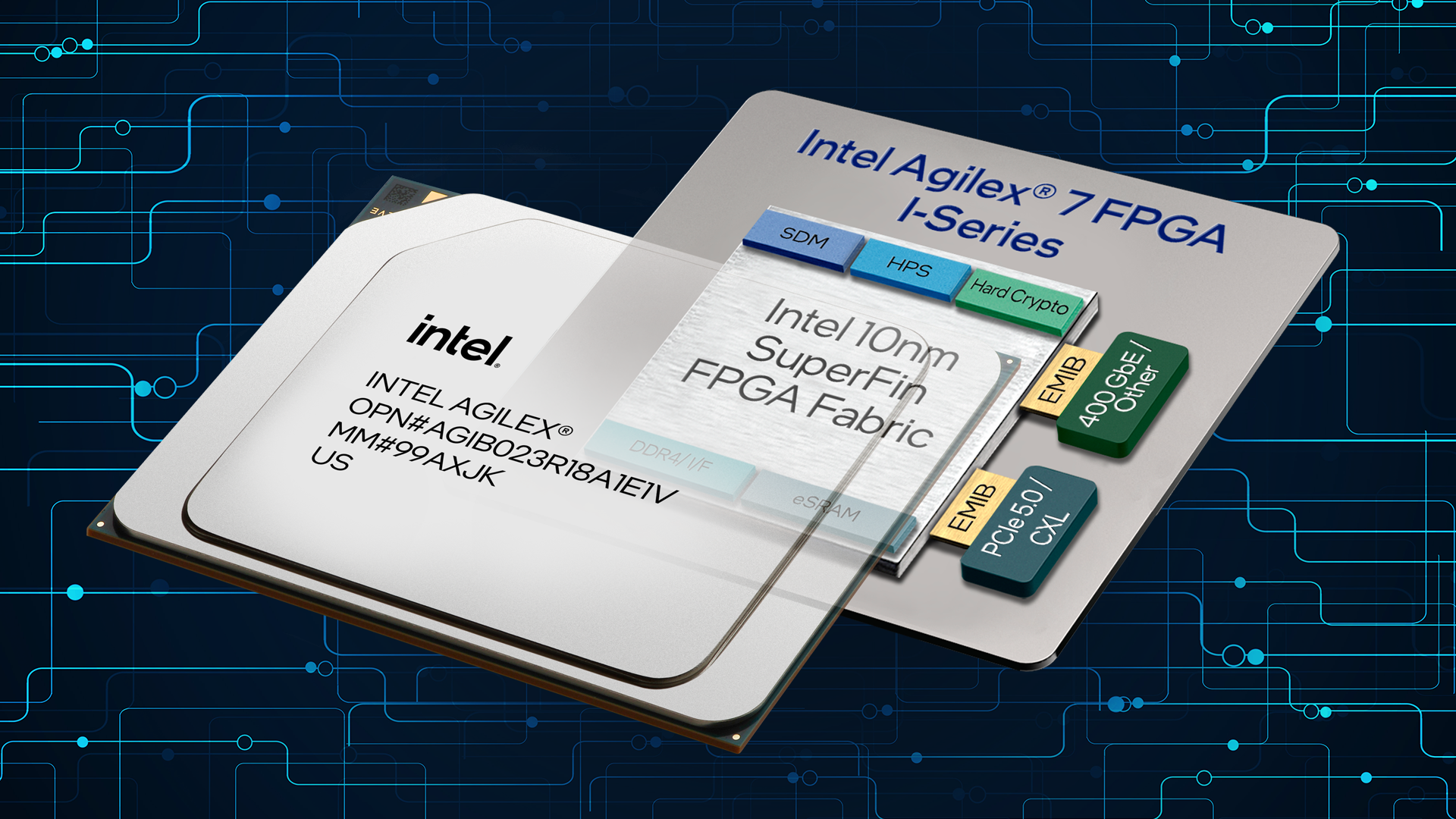

March - Intel announces its new, state-of-the-art Agilex 7 FPGA-based transceiver

March heralded a significant leap in FPGA technology with Intel’s launch of the Agilex 7 FPGA-based transceiver. This announcement introduced a device capable of delivering unprecedented data rates, tailored for a variety of applications from cloud computing to 5G networks. The Agilex 7’s cutting-edge design and multiprotocol support promise to revolutionize connectivity and processing capabilities, offering a versatile solution for an array of high-demand tech environments.

Short overview

The Agilex 7 FPGAs leverage cutting-edge 10 nanometer SuperFin process technology, offering multiprotocol capabilities and support for faster data rates. With transceivers capable of delivering speeds of up to 116 gigabits per second (Gbps) and hardened 400 gigabit Ethernet (GbE) intellectual property (IP), these devices enable customers to create new connectivity topologies within a single device.

Impact

The introduction of Agilex 7 FPGAs brings forth a myriad of benefits for various industries. With its flexibility and high-performance capabilities, it addresses the demands of bandwidth-intensive applications, networking, cloud computing, embedded systems, and compute-intensive tasks. This translates to lower costs and enhanced efficiency for network operators, cloud providers, and enterprise organizations. Furthermore, Intel’s ongoing optimization of FPGA technology ensures its applicability across diverse sectors, including optical networking, data centers, broadcast studios, medical testing facilities, and 5G networks, among others.

April – Speeding up FPGA development by using Rapid Silicon’s new RapidGPT, an AI chatbot-based FPGA design tool

April saw the introduction of Rapid Silicon’s RapidGPT, a revolutionary AI chatbot designed to streamline FPGA development. This tool, with its conversational interface and advanced features like code autocompletion and optimization, promises to significantly boost the efficiency of FPGA designers, potentially transforming the landscape of hardware development in the industry.

Short overview

RapidGPT is an intelligent software interface, functioning via a chat-based platform, tailored to FPGA chip designers. Unlike conventional development tools, RapidGPT employs natural language processing to facilitate tasks such as code autocompletion, design assistance, debugging, optimization, and analysis. Its conversational interface simplifies the complexities of FPGA and HDL development, offering a user-friendly experience akin to everyday chat-based applications.

Impact

The introduction of RapidGPT marks a significant advancement in FPGA development methodology. By boosting developer productivity and reducing time-to-market for FPGA-based products, it addresses a critical need in the industry. RapidGPT also fills a gap in the FPGA and HDL community: as a chat-based design assistant, it has the potential to accelerate innovation and foster growth across the FPGA industry, leading to more efficient and advanced FPGA-based solutions.

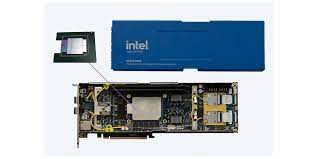

May – Intel launches the new Agilex 7 FPGA, the first FPGA with an R-Tile chiplet

May brought a groundbreaking development from Intel with the release of the Agilex 7 FPGA, featuring the R-Tile chiplet. This innovation sets a new standard for bandwidth capabilities in the FPGA arena, promising enhanced performance and efficiency for data-intensive sectors. The integration of PCIe 5.0 and CXL positions the Agilex 7 as a pivotal component in high-performance computing environments, offering a significant leap forward in data processing and connectivity.

Short overview

Intel’s Agilex 7 FPGA, equipped with the R-Tile chiplet, is the first of its kind to support PCIe 5.0 and CXL interfaces, delivering unparalleled bandwidth and performance. Compared to other FPGA products, Agilex 7 offers twice the PCIe 5.0 bandwidth and four times the CXL bandwidth, making it a standout solution for high-performance computing applications. This integration enables seamless connectivity with other Intel processors, such as the 4th Gen Intel Xeon Scalable processors, facilitating high-performance workloads across various industries.
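To give a sense of scale for a PCIe 5.0 link, here is a back-of-the-envelope calculation using only the standard PCIe 5.0 signaling rate (32 GT/s per lane) and its 128b/130b line encoding. It is a sketch, not a model of the Agilex 7: it does not reproduce or verify Intel’s 2x/4x comparisons, ignores packet and protocol overhead, and the lane counts are chosen purely for illustration.

```python
# PCIe 5.0 signals at 32 GT/s per lane and uses 128b/130b line encoding.
TRANSFERS_PER_S = 32e9
ENCODING_EFFICIENCY = 128 / 130


def pcie5_bandwidth_gb_s(lanes: int) -> float:
    """Per-direction link bandwidth in GB/s after line encoding.
    Packet headers and other protocol overhead are NOT subtracted,
    so real payload throughput is lower."""
    bits_per_s = TRANSFERS_PER_S * ENCODING_EFFICIENCY * lanes  # one bit per transfer per lane
    return bits_per_s / 8 / 1e9


for lanes in (1, 4, 8, 16):
    print(f"x{lanes:<2} ~ {pcie5_bandwidth_gb_s(lanes):5.2f} GB/s per direction")
```

A single lane works out to roughly 3.94 GB/s in each direction, and a full x16 link to roughly 63 GB/s, which is why doubling available PCIe 5.0 bandwidth matters for data-hungry accelerators.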

Impact

The introduction of Agilex 7 with R-Tile chiplet technology has far-reaching implications across multiple sectors. By addressing time, budget, and power constraints, it offers a cost-effective solution for industries reliant on data centers, telecommunications, and financial services. The integration of R-Tile technology enhances power efficiency and data throughput, leading to lower Total Cost of Ownership (TCO) for high-performance installations.

Moreover, the collaboration between Intel and other industry players, as demonstrated by the white paper from Meta and the University of Michigan, underscores the potential for significant performance improvements in various computing tasks, further driving innovation and efficiency in FPGA-based systems.

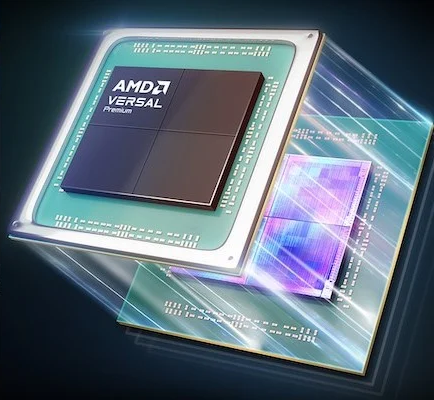

June – AMD launches the world’s largest FPGA-based adaptive SoC for emulation and prototyping

June marked a significant milestone with AMD’s release of the Versal Premium VP1902, the largest FPGA-based adaptive SoC designed for emulation and prototyping. This chip, tailored for accelerating the development of AI, autonomous vehicles, and other cutting-edge technologies, promises to revolutionize the design and verification process in the semiconductor industry.

Short overview

The AMD Versal Premium VP1902 SoC represents a significant advancement in chip design and verification. With its emulation-class, chiplet-based architecture and 18.5M logic cells, it streamlines the validation of complex semiconductor designs, particularly in fields like AI and autonomous vehicles. Equipped with robust debugging capabilities, it ensures efficient pre-silicon verification and concurrent software development. Moreover, its integration into AMD’s development tools enhances efficiency with features like automated design assistance and real-time debugging.

Impact

The VP1902 SoC’s introduction accelerates the time-to-market for new technologies while keeping projects on schedule and within budget. By expediting chip development and enhancing debugging capabilities, it fosters innovation in AI, autonomous vehicles, and other emerging fields. Its integration with AMD’s tools empowers designers to iterate on designs faster, driving progress and efficiency in the semiconductor industry.

July – Accelerating the creation of automotive system designs and applications using Lattice Drive

In July, Lattice Semiconductor introduced Lattice Drive, a software solution designed to expedite the development of automotive infotainment and ADAS systems. This innovation is particularly timely as the automotive industry evolves, incorporating an increasing array of displays, sensors, and other technologies.

Short overview

Lattice Drive offers a comprehensive solution stack designed specifically to address the challenges in automotive system design. It facilitates advanced display bridging and processing, catering to the demands of modern automotive architectures requiring efficient data handling and processing. Leveraging the inherent flexibility and versatility of FPGAs, Lattice Drive enables the integration of multiple FPGAs within a vehicle, each powering different applications and functionalities.

Impact

By harnessing the advantages of FPGA technology through Lattice Drive, automotive developers can significantly reduce the time-to-market for new systems. The software’s ability to leverage FPGA flexibility and perform diverse functions enhances system capabilities while maintaining a compact footprint. This acceleration in system development not only meets the evolving demands of the automotive industry but also contributes to improving safety and enhancing the in-car experience for users.

August – Astonishing graphics achieved by Andy Toone’s VideoBeast coprocessor for eight-bit retro systems

In August, the gaming community witnessed a significant leap forward with the introduction of VideoBeast by Andy Toone, a device poised to merge the nostalgic charm of eight-bit microcomputers with the dynamic visuals of contemporary gaming through its FPGA-based technology.

Short overview

VideoBeast, developed by vintage gaming enthusiast Andy Toone, serves as a “monster graphics” coprocessor tailored for eight-bit microcomputers. Equipped with advanced features such as 512 colors, intricate sprite handling, widescreen resolutions, and 1MB of video RAM, VideoBeast leverages FPGA technology to overcome the architectural limitations of old video consoles. Compatible with systems utilizing 4k, 8k, or 16k static RAM chips, VideoBeast recreates the power of discrete logic video cards in a compact and cost-effective form factor. It offers six independently positionable layers, various text and bitmap options, tile rendering with full scrolling, and sprite functionalities, all customizable to deliver optimal gaming experiences.
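The overview doesn’t describe VideoBeast’s internals, so the following is a generic software model of layered tile rendering with scrolling, not Andy Toone’s design. It shows how a pixel can be resolved by looking up a tile through each layer’s scroll offset and letting the frontmost non-transparent layer win. The 8×8 tile size, palette index 0 as transparent, and the names `TileLayer` and `compose` are all assumptions for illustration; VideoBeast has six layers, but two are enough to show the idea.

```python
from dataclasses import dataclass

TILE = 8          # tile edge in pixels (assumed for illustration)
TRANSPARENT = 0   # palette index treated as see-through (assumed)


@dataclass
class TileLayer:
    tiles: list     # tiles[n][row][col] -> palette index
    tilemap: list   # tilemap[ty][tx] -> tile number
    scroll_x: int = 0
    scroll_y: int = 0

    def pixel(self, sx: int, sy: int) -> int:
        """Palette index this layer shows at screen pixel (sx, sy)."""
        map_w = len(self.tilemap[0]) * TILE
        map_h = len(self.tilemap) * TILE
        # Apply the layer's scroll offset, wrapping at the map edges.
        x = (sx + self.scroll_x) % map_w
        y = (sy + self.scroll_y) % map_h
        tile = self.tiles[self.tilemap[y // TILE][x // TILE]]
        return tile[y % TILE][x % TILE]


def compose(layers, sx, sy, background=TRANSPARENT):
    """Layers are ordered back-to-front; the frontmost opaque pixel wins."""
    for layer in reversed(layers):
        p = layer.pixel(sx, sy)
        if p != TRANSPARENT:
            return p
    return background


def solid(color):
    """A hypothetical tile filled with a single palette index."""
    return [[color] * TILE for _ in range(TILE)]


tiles = [solid(TRANSPARENT), solid(5), solid(9)]
back = TileLayer(tiles, [[1, 1], [1, 1]])    # fully opaque, colour 5
front = TileLayer(tiles, [[2, 0], [0, 0]])   # one opaque tile, colour 9
print(compose([back, front], 0, 0), compose([back, front], 8, 0))
front.scroll_x = 8                           # scroll the front layer by one tile
print(compose([back, front], 0, 0), compose([back, front], 8, 0))
```

Scrolling only changes an offset added before the tile lookup, so a whole layer moves without redrawing any pixel data; dedicated video hardware can exploit this to scroll smoothly on systems whose CPUs could never redraw a full screen per frame.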

Impact

VideoBeast not only preserves but also enhances the vintage gaming experience, allowing a new generation of gamers to enjoy classic games with improved graphics. By simplifying programming complexities and offering ample video RAM for pre-loading and enhancing visuals, VideoBeast empowers developers to create immersive gaming experiences on eight-bit systems. This device bridges the gap between retro gaming nostalgia and modern graphical expectations, inviting more enthusiasts to explore and appreciate the rich history of vintage gaming.

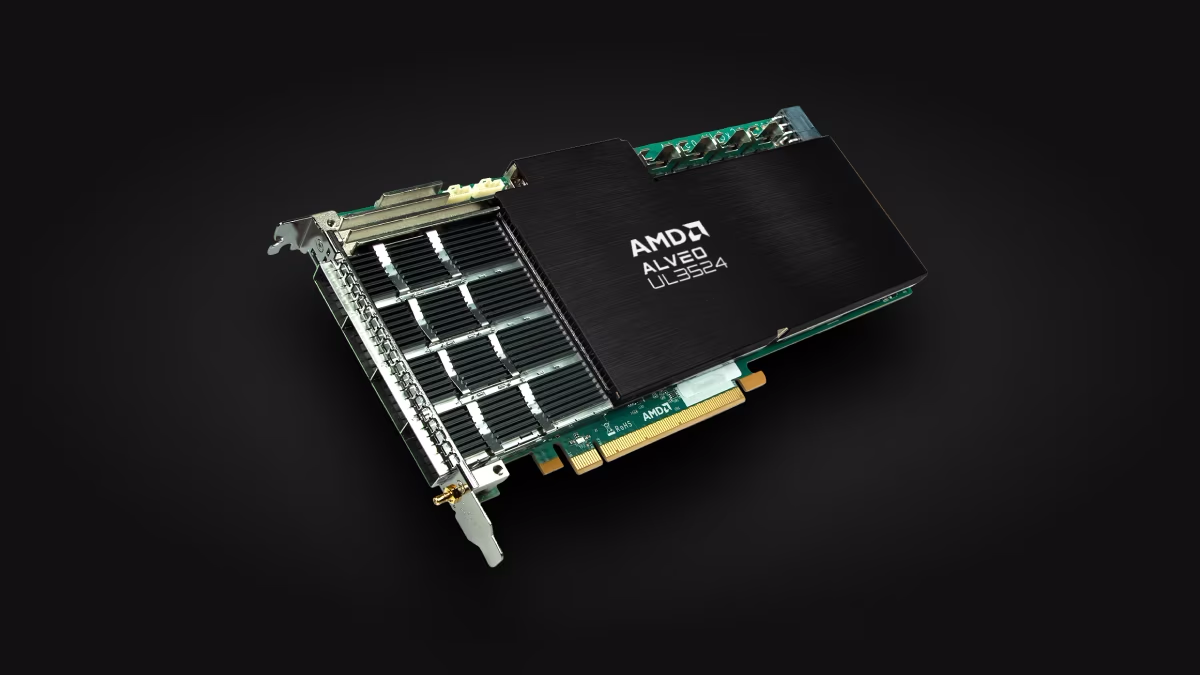

September - AMD’s ultra-low-latency electronic trading accelerator based on the 16nm Virtex UltraScale+ FPGA

In September, AMD unveiled the Alveo UL3524 accelerator card, a groundbreaking development in ultra-low latency electronic trading powered by the 16nm Virtex UltraScale+ FPGA technology. This innovation significantly accelerates trading capabilities, setting a new benchmark for performance in the financial sector.

Short overview